Hello, I’m trying to create a dynamic pipeline with a trunk that encodes the framebuffer using the omxh264enc element, plus multiple branches: one that writes the encoded stream to a file and one that sends it over UDP. The pipeline looks something like this:

```shell
gst-launch-1.0 multifilesrc location=/dev/fb0 ! \
  rawvideoparse format=8 width=1024 height=768 framerate=25/1 ! \
  videoconvert ! \
  video/x-raw,format=NV12 ! \
  omxh264enc target-bitrate=16384 qp-mode=auto entropy-mode=CABAC min-qp=10 max-qp=51 periodicity-idr=4 ! \
  video/x-h264,profile=high ! \
  tee name=t ! \
  queue ! \
  identity eos-after=512 ! \
  h264parse ! \
  mp4mux ! \
  filesink location=test.mp4 \
  t. ! \
  queue ! \
  rtph264pay name=pay0 pt=96 ! \
  udpsink host=192.168.1.10 port=6666
```

This works fine with gst-launch-1.0. I then wrote a C++ application that builds the trunk and adds branches to the tee after the pipeline is already PLAYING. When I attach a branch consisting of `queue ! fakesink` to the tee, the pipeline goes to PAUSED, and the omxh264enc element doesn’t push any more buffers, even after the pipeline is set back to PLAYING. I think this is an issue with the omxh264enc element: if I remove it, I can add and remove branches and everything works.
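One way to picture the trunk/branch split is to cut the launch line above at the tee. The sketch below only builds description strings in plain standard C++; the helpers `trunkDescription` and `branchDescription` are hypothetical, and feeding the results to `gst_parse_launch()` (trunk) and `gst_parse_bin_from_description(desc, TRUE, &error)` (each branch, which ghosts the queue’s unlinked sink pad) is an assumption about how such an application could be organised, not necessarily how mine is.

```cpp
#include <string>
#include <vector>

// Hypothetical helper: everything up to and including the tee.
// Assumed to be passed once to gst_parse_launch() at startup.
std::string trunkDescription() {
    return "multifilesrc location=/dev/fb0 ! "
           "rawvideoparse format=8 width=1024 height=768 framerate=25/1 ! "
           "videoconvert ! video/x-raw,format=NV12 ! "
           "omxh264enc target-bitrate=16384 qp-mode=auto entropy-mode=CABAC "
           "min-qp=10 max-qp=51 periodicity-idr=4 ! "
           "video/x-h264,profile=high ! tee name=t";
}

// Hypothetical helper: every branch starts with its own queue, mirroring the
// "t. ! queue ! ..." pattern of the launch line, followed by the given elements.
std::string branchDescription(const std::vector<std::string>& elements) {
    std::string desc = "queue";
    for (const std::string& element : elements) {
        desc += " ! " + element;
    }
    return desc;
}
```

For example, the `queue ! fakesink` test branch corresponds to `branchDescription({"fakesink"})`, and the file branch to `branchDescription({"identity eos-after=512", "h264parse", "mp4mux", "filesink location=test.mp4"})`.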

This is running on an embedded system based on a Zynq UltraScale+, and the omxh264enc element uses the on-chip VCU hardware.

If I set the pipeline to NULL before adding the branch and back to PLAYING afterwards, everything works, but the transition to NULL sometimes takes ~5 s, which is unacceptable for our use case. I also can’t move the omxh264enc element after the tee, because our hardware doesn’t have enough memory bandwidth to run more than one encoding stream at a time.
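To put a number on that NULL transition, the blocking call can be timed with a monotonic clock. This is a minimal, GStreamer-independent sketch: `measureMillis` is a hypothetical helper, and wrapping `gst_element_set_state(pipeline, GST_STATE_NULL)` in the callable is an assumed usage. As far as I know, downward state changes are not asynchronous, so that call should only return once the pipeline has reached NULL.

```cpp
#include <chrono>
#include <functional>

// Hypothetical helper: runs a blocking operation and returns its duration in
// whole milliseconds, measured with std::chrono::steady_clock (monotonic).
long long measureMillis(const std::function<void()>& op) {
    const auto start = std::chrono::steady_clock::now();
    op();
    const auto end = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count();
}

// Assumed usage inside the application (needs GStreamer, so not compiled here):
//   long long ms = measureMillis([&] {
//       gst_element_set_state(pipeline, GST_STATE_NULL);
//   });
//   PLOG_DEBUG << "PLAYING -> NULL took " << ms << " ms";
```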

Here is a code snippet showing how I attach a branch to the tee.

```cpp
bool GStreamerBranch::attachToPipeline(GstElement *pipeline, GstElement *tee) {
    PLOG_DEBUG << "Attaching branch " << m_Name.toStdString() << " to pipeline";

    // Stored only for the dot dumps (which are written only when
    // GST_DEBUG_DUMP_DOT_DIR is set)
    m_Pipeline = pipeline;
    m_Tee = tee;

    // Request a new src pad from the trunk tee
    GstPadTemplate *templ =
        gst_element_class_get_pad_template(GST_ELEMENT_GET_CLASS(tee), "src_%u");
    m_TeeSrcPad = gst_element_request_pad(tee, templ, NULL, NULL);

    GST_DEBUG_BIN_TO_DOT_FILE_WITH_TS(GST_BIN(m_Pipeline), GST_DEBUG_GRAPH_SHOW_ALL,
                                      QString("%1_request_pad").arg(m_Name).toUtf8());

    // Add all elements to the pipeline
    if (!gst_bin_add(GST_BIN(pipeline), m_Queue)) {
        PLOG_ERROR << "Failed to add element " << GST_OBJECT_NAME(m_Queue);
        return false;
    }
    for (GstElement *element : m_Elements) {
        if (!gst_bin_add(GST_BIN(pipeline), element)) {
            PLOG_ERROR << "Failed to add element " << GST_OBJECT_NAME(element);
            return false;
        }
    }

    GST_DEBUG_BIN_TO_DOT_FILE_WITH_TS(GST_BIN(m_Pipeline), GST_DEBUG_GRAPH_SHOW_ALL,
                                      QString("%1_add_elements").arg(m_Name).toUtf8());

    // Link our elements together, starting from the queue
    GstElement *prevElement = m_Queue;
    for (GstElement *element : m_Elements) {
        if (!gst_element_link(prevElement, element)) {
            PLOG_ERROR << "Failed to link " << GST_OBJECT_NAME(prevElement)
                       << " to " << GST_OBJECT_NAME(element);
            return false;
        }
        prevElement = element;
    }

    GST_DEBUG_BIN_TO_DOT_FILE_WITH_TS(GST_BIN(m_Pipeline), GST_DEBUG_GRAPH_SHOW_ALL,
                                      QString("%1_link_elements").arg(m_Name).toUtf8());

    // Sync the new elements with the state of the (already PLAYING) parent
    if (!gst_element_sync_state_with_parent(m_Queue)) {
        PLOG_ERROR << "Failed to sync queue with parent";
        return false;
    }
    for (GstElement *element : m_Elements) {
        if (!gst_element_sync_state_with_parent(element)) {
            // GST_OBJECT_NAME does not allocate, unlike gst_element_get_name()
            PLOG_ERROR << "Failed to sync " << GST_OBJECT_NAME(element) << " with parent";
            return false;
        }
    }

    GST_DEBUG_BIN_TO_DOT_FILE_WITH_TS(GST_BIN(m_Pipeline), GST_DEBUG_GRAPH_SHOW_ALL,
                                      QString("%1_sync_elements").arg(m_Name).toUtf8());

    // Link the tee src pad to the queue sink pad
    GstPad *queueSinkPad = gst_element_get_static_pad(m_Queue, "sink");
    GstPadLinkReturn linkRet = gst_pad_link(m_TeeSrcPad, queueSinkPad);
    gst_object_unref(queueSinkPad);
    if (GST_PAD_LINK_FAILED(linkRet)) {
        PLOG_ERROR << "Failed to link tee to queue: " << gst_pad_link_get_name(linkRet);
        return false;
    }

    GST_DEBUG_BIN_TO_DOT_FILE_WITH_TS(GST_BIN(m_Pipeline), GST_DEBUG_GRAPH_SHOW_ALL,
                                      QString("%1_link_tee_queue").arg(m_Name).toUtf8());

    return true;
}
```

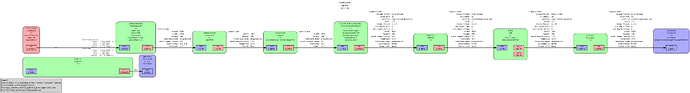

The dot file written after the gst_element_sync_state_with_parent() calls shows the pipeline in PAUSED (`[=]->[=]`), while the newly added fakesink shows `[-]->[>]`.
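The state markers in the dot dump are terse. As I understand GStreamer’s debug-dump code, each element label shows either `[current]` or `[current] -> [pending]`, with `~`, `0`, `-`, `=` and `>` standing for VOID_PENDING, NULL, READY, PAUSED and PLAYING. The small standalone decoder below (hypothetical helpers, not part of GStreamer) spells out the two labels: the pipeline is PAUSED with PAUSED pending, and the fakesink is READY with PLAYING pending.

```cpp
#include <cstddef>
#include <string>

// Assumption: mirrors the icon table used for .dot dumps, indexed by GstState
// (VOID_PENDING=0 '~', NULL=1 '0', READY=2 '-', PAUSED=3 '=', PLAYING=4 '>').
std::string stateFromIcon(char icon) {
    switch (icon) {
        case '~': return "VOID_PENDING";
        case '0': return "NULL";
        case '-': return "READY";
        case '=': return "PAUSED";
        case '>': return "PLAYING";
        default:  return "UNKNOWN";
    }
}

// Decodes labels such as "[=]->[=]" or "[-] -> [>]" into "current -> pending".
// A label with a single bracket pair (e.g. "[>]") has no pending state change.
std::string decodeStateLabel(const std::string& label) {
    std::string icons;
    for (std::size_t i = 0; i + 2 < label.size(); ++i) {
        if (label[i] == '[' && label[i + 2] == ']') {
            icons += label[i + 1];
        }
    }
    if (icons.size() == 1) return stateFromIcon(icons[0]);
    if (icons.size() == 2) return stateFromIcon(icons[0]) + " -> " + stateFromIcon(icons[1]);
    return "UNKNOWN";
}
```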

Is there any way to add a branch to the tee without pausing the pipeline?

Maybe I’m missing a step when adding the branch?

Thanks for any help!