I use NVIDIA hardware decoding in a thread, but it does not release GPU memory properly

I release the GPU memory when I receive GST_MESSAGE_EOS from the RTSP stream, but it doesn't seem to work.

I think it's hard to know what the problem is here without seeing the rest of your code.

Do you have a minimal reproducer application/code that shows the problem?

Since you’re using appsink, could it be that you’re not releasing the samples/buffers?
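To make sure every pulled sample is released on every return path, including early error returns, one option in C++ is to hold it in an RAII wrapper so the release call runs in a destructor. The sketch below shows the pattern with a mock handle so it runs without GStreamer; `MockSample`, `mockPullSample()` and `mockSampleUnref()` are hypothetical stand-ins for `GstSample`, the `"pull-sample"` action signal and a small wrapper function that calls `gst_sample_unref()`.

```cpp
#include <cassert>
#include <memory>

// Deleter that calls a C-style release function (for GStreamer this would be a
// wrapper around gst_sample_unref(); here it is a mock).
template <typename T, void (*ReleaseFn)(T *)>
struct Releaser {
    void operator()(T *p) const {
        if (p != nullptr)
            ReleaseFn(p);
    }
};

template <typename T, void (*ReleaseFn)(T *)>
using UniqueHandle = std::unique_ptr<T, Releaser<T, ReleaseFn>>;

// --- Hypothetical mock of a ref-counted sample, used only for illustration ---
struct MockSample {
    int *liveCount;  // counts samples that have been pulled but not released
};

inline void mockSampleUnref(MockSample *s) {
    --(*s->liveCount);
    delete s;
}

inline MockSample *mockPullSample(int &liveCount) {
    ++liveCount;
    return new MockSample{&liveCount};
}

// Same shape as a frame reader: several returns, a single release point.
inline int readFrameSketch(int &liveCount, bool formatSupported) {
    UniqueHandle<MockSample, mockSampleUnref> sample(mockPullSample(liveCount));
    if (!sample)
        return -1;
    if (!formatSupported)
        return -1;  // early return: the destructor still releases the sample
    assert(liveCount == 1);  // the sample stays alive while it is being used
    return 0;
}
```

With real GStreamer types the wrapper would be declared as `UniqueHandle<GstSample, releaseSample>`, where `releaseSample` is your own function calling `gst_sample_unref()`. In plain C, GLib's `g_autoptr(GstSample)` serves the same purpose.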

Have you tried using the GStreamer leak tracer? (It requires calling gst_deinit() at the end of your app.)
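The leak tracer is enabled through environment variables, either from the shell (`GST_TRACERS=leaks GST_DEBUG=GST_TRACER:7 ./app`) or from the program itself before `gst_init()` runs, because the tracer list is read at init time. A minimal sketch, assuming a POSIX system; `enableGstLeakTracer()` is a hypothetical helper name and it overwrites any `GST_DEBUG` value already set.

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>

// Turn on GStreamer's "leaks" tracer for this process.
// Call this before gst_init(); objects still alive are reported when gst_deinit() runs.
inline bool enableGstLeakTracer() {
    // POSIX setenv(); the final argument 1 replaces any existing value
    bool ok = setenv("GST_TRACERS", "leaks", 1) == 0;
    ok = ok && setenv("GST_DEBUG", "GST_TRACER:7", 1) == 0;
    return ok;
}
```

Usage: call `enableGstLeakTracer()`, then `gst_init(&argc, &argv)`, run and tear down the decoders, and finally call `gst_deinit()`; any pipeline, element, caps or sample that was never unreffed then shows up in the log.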

```cpp
//GstDecoder.h
#pragma once

#include <atomic>
#include <string>
#include <utility>

#include <opencv2/opencv.hpp>
//#include "videoDecoder.h"
#include "yuv2bgr.h"
#include <cuda_runtime.h>
#include <cuda_profiler_api.h>
#include <curand.h>
#include "dataModule.hpp"
#include <unistd.h>

// GStreamer and GLib headers declare C linkage themselves (G_BEGIN_DECLS),
// so they do not need an extern "C" wrapper.
#include <gst/gst.h>
#include <gst/app/gstappsink.h>
#include <gst/rtsp/gstrtsptransport.h>
#include <glib.h>

typedef struct _custom_data
{
    /* pipeline{source->rtpdepay->rtp_queue->h264parse->h264_queue->hard_decoder->cudaconvert->cudadownload->video_queue->sink} */
    GstElement *pipeLine,
               *source, *rtpDepay, *rtpQueue,
               *h264Parse, *h264Queue,
               *hardDecoder, *imgFilter,
               *cudaConvert, *cudaDownload, *videoQueue, *sink;
    std::string Rtsp;
    std::atomic<int> exitFlag;  // written by the bus thread, read by readFrame()
    int blockId;
    dataModule::dataChart mPicChart_;
    cv::Mat temp;
} CustomData;

class gstDecoder
{
public:
    CustomData *data;

    gstDecoder(char *rtsp_url);
    ~gstDecoder();

    int initGstEnv();
    int getDecodeFrame(cv::Mat &frame, double &timestamp);
    void setImgSize(int mWidth, int mHeight);
    void setGstPipeLineName(char *pipelinename);
    void setConfig(int block_id, dataModule::dataChart &picChart_);
    int readFrame(cv::Mat &frame, double &timestamp);

protected:
    bool stopFlag;
    char *rtspUrl;
    std::string srcFmt;
    int paddedWidth = 0;
    int paddedHeight = 0;
    int width = 0;
    int height = 0;
    cv::Mat image;
    bool isFirstFlag;
    bool exitFlag;
    int blockId;
    int recordNum;
    char *pipeLineName;
    int saveNum;
    // cv::cuda::GpuMat reqMat,resMat; // GPU Mat data
    // cv::cuda::GpuMat matChannel4;
    // CustomData *data;
    std::pair<int, int> framerate;
};
```

```cpp
//This is gst decoder c++ class
#include "GstDecoder.h"

#include <cstdio>
#include <cstdlib>
#include <cstring>
#include <iostream>
#include <pthread.h>

// "pad-added" callback: link the new rtspsrc pad to the rtph264depay sink pad
void pad_added_handler(GstElement *src, GstPad *new_pad, GstElement *rtph264depay)
{
    GstPad *sink_pad = gst_element_get_static_pad(rtph264depay, "sink");
    GstCaps *new_pad_caps = nullptr;

    if (gst_pad_is_linked(sink_pad))
        goto exit;  // we are already linked

    new_pad_caps = gst_pad_get_current_caps(new_pad);
    if (new_pad_caps == nullptr)
        goto exit;  // no negotiated caps to inspect

    {
        const gchar *new_pad_type = gst_structure_get_name(gst_caps_get_structure(new_pad_caps, 0));

        // rtph264depay only accepts application/x-rtp
        if (!g_str_has_prefix(new_pad_type, "application/x-rtp"))
            goto exit;

        if (GST_PAD_LINK_FAILED(gst_pad_link(new_pad, sink_pad)))
            g_print("Type is '%s' but link failed.\n", new_pad_type);
    }

exit:
    // release the caps and the pad reference on every path
    if (new_pad_caps != nullptr)
        gst_caps_unref(new_pad_caps);
    if (sink_pad != nullptr)
        gst_object_unref(sink_pad);
}

// void readFrame_cllback(GstElement *src, gpointer user_data)
// {
//     CustomData *pData = (CustomData *)user_data;
//     GstSample *sample = nullptr;
//     // copy from map
//     g_signal_emit_by_name(src, "pull-sample", &sample);
//     if (sample) {
//         GstBuffer *buffer = gst_sample_get_buffer(sample);
//         if (buffer) {
//             GstMapInfo map;
//             if (gst_buffer_map(buffer, &map, GST_MAP_READ)) {
//                 if (map.size == 0)
//                     return;
//                 memcpy(pData->temp.data, map.data, map.size);
//                 pData->mPicChart_.writeGroupData(DATABLOCK_ID_MAT, pData->blockId, &pData->temp);
//                 gst_buffer_unmap(buffer, &map);
//             } else {
//                 printf("gst_buffer_map failed!!!\n");
//             }
//         } else {
//             printf("gst_sample_get_buffer failed!!!\n");
//         }
//         gst_sample_unref(sample); // release sample reference
//     } else {
//         printf("sample is null...\n");
//     }
//     return;
// }
```

static void *ErrHandle(void *arg) {

pthread_detach(pthread_self());

//printf("start errhandle\n");

CustomData *pData = (CustomData *)arg;

GstBus *bus = gst_element_get_bus(pData->pipeLine);

GstMessage *msg = gst_bus_timed_pop_filtered(bus, GST_CLOCK_TIME_NONE, (GstMessageType)(GST_MESSAGE_ERROR | GST_MESSAGE_EOS));

//GstMessage *msg = gst_bus_timed_pop_filtered(bus, 100 * GST_MSECOND, (GstMessageType)(GST_MESSAGE_STATE_CHANGED | GST_MESSAGE_ERROR | GST_MESSAGE_EOS));

GstStateChangeReturn ret;

// Message handling

if (msg != nullptr) {

switch (GST_MESSAGE_TYPE(msg)) {

case GST_MESSAGE_ERROR: {

GError *err = nullptr;

gchar *debug_info = nullptr;

gst_message_parse_error(msg, &err, &debug_info);

std::cerr<< "Error received:" << err->message << std::endl;

g_clear_error(&err);

g_free(debug_info);

pData->exitFlag = -1;

}

break;

case GST_MESSAGE_EOS:

{

pData->exitFlag = -1;

std::cout << "EEEEError: End-Of-Stream reached" << std::endl;

}

break;

default:

std::cout << "Unexpected message received" << std::endl;

break;

}

gst_element_send_event (pData->pipeLine, gst_event_new_eos ());

//gst_message_unref(msg);

}

//gst_bus_post(bus, gst_message_new_eos(NULL));

//GstEvent *event = gst_event_new_flush_start();

//gst_pad_push_event(pad, event);

//event = gst_event_new_flush_stop(TRUE);

//gst_pad_push_event(pad, event);

gst_message_unref(msg);

if(bus)

gst_object_unref(bus);

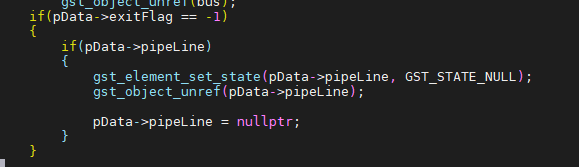

if(pData->exitFlag == -1)

{

printf("exitFlag ==1\n");

//gst_element_set_state(pData->pipeLine, GST_STATE_NULL);

if(pData->pipeLine)

{

//pData->pipeline = nullptr;

printf("pipeline %d is unref ..................\n", pData->blockId);

gst_element_set_state(pData->pipeLine, GST_STATE_NULL);

gst_object_unref(pData->pipeLine);

//gst_element_set_state(pData->hardDecoder, GST_STATE_NULL);

//gst_object_unref(pData->hardDecoder);

//gst_object_unref(pData->cudaConvert);

//gst_object_unref(pData->cudaDownload);

pData->pipeLine = nullptr;

}

}

pthread_exit(NULL);

}

```cpp
gstDecoder::gstDecoder(char *videoUrl)
    : rtspUrl(videoUrl)
{
    isFirstFlag = true;
    exitFlag = true;
    recordNum = 0;
    saveNum = 0;
    // CustomData contains C++ objects (std::string, cv::Mat, dataChart), so it must be
    // created with new rather than malloc(); "()" also zero-initializes the raw pointers.
    data = new CustomData();
}

void gstDecoder::setConfig(int block_id, dataModule::dataChart &picChart)
{
    blockId = block_id;
    data->mPicChart_ = picChart;
}

void gstDecoder::setGstPipeLineName(char *pipelinename)
{
    pipeLineName = pipelinename;
}
```

int gstDecoder::initGstEnv()

{

//data = (CustomData*)malloc(sizeof(CustomData));

printf("initGstEnv pipeline name is %s\n",pipeLineName);

data->pipeLine = gst_pipeline_new(pipeLineName);

data->exitFlag = 0;

data->blockId = blockId;

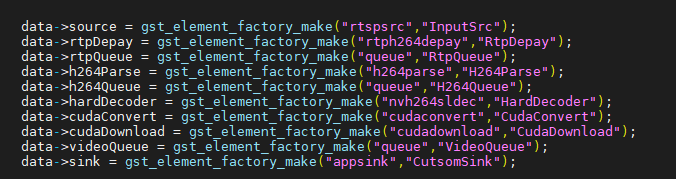

data->source = gst_element_factory_make("rtspsrc","InputSrc");

//rtpBin = gst_element_factory_make("rtpbin", "RtpBin");

data->rtpDepay = gst_element_factory_make("rtph264depay","RtpDepay");

data->rtpQueue = gst_element_factory_make("queue","RtpQueue");

data->h264Parse = gst_element_factory_make("h264parse","H264Parse");

data->h264Queue = gst_element_factory_make("queue","H264Queue");

//data->hardDecoder = gst_element_factory_make("nvh264dec","HardDecoder");

data->hardDecoder = gst_element_factory_make("nvh264dec","HardDecoder");

data->cudaConvert = gst_element_factory_make("cudaconvert","CudaConvert");

data->cudaDownload = gst_element_factory_make("cudadownload","CudaDownload");

data->videoQueue = gst_element_factory_make("queue","VideoQueue");

data->sink = gst_element_factory_make("appsink","CutsomSink");

if(!data->pipeLine || !data->source || !data->rtpDepay || !data->rtpQueue || !data->h264Parse || !data->h264Queue || !data->hardDecoder || !data->cudaConvert || !data->cudaDownload || !data->videoQueue || !data->sink)

{

printf("init all elements failed \n");

return -1;

}

GstRTSPLowerTrans lowerTrans;

lowerTrans = GST_RTSP_LOWER_TRANS_TCP;

g_object_set(G_OBJECT(data->source), "location", rtspUrl, "latency", "200", nullptr);

g_object_set(G_OBJECT(data->source), "protocols", lowerTrans, NULL);

//g_object_set(G_OBJECT(data->source), "timeout", 5000000, NULL);

//g_object_set(G_OBJECT(data->source), "tcp-timeout", 5000000, NULL);

//g_object_set(G_OBJECT(data->source), "teardown-timeout", 300000000, NULL);

//g_object_set(G_OBJECT(sink), "emit-signals", TRUE, "max-buffers", 1, nullptr);

//g_object_set(G_OBJECT(sink),"max-buffers", 10,NULL);

//g_object_set(G_OBJECT(rtspsrc), "transport", GST_RTSP_TRANSPORT_TCP, NULL);

g_object_set(G_OBJECT(data->sink),

"sync", FALSE,

"emit-signals", TRUE,

"caps", gst_caps_new_simple("video/x-raw",

// "width", G_TYPE_INT, 1920,

// "height", G_TYPE_INT, 1080,

// "framerate", GST_TYPE_FRACTION, 10, 1,

"format", G_TYPE_STRING, "BGR", NULL),

NULL);

gst_bin_add_many(GST_BIN(data->pipeLine), data->source, data->rtpDepay, data->rtpQueue, data->h264Parse, data->h264Queue, data->hardDecoder, data->cudaConvert, data->cudaDownload, data->sink, nullptr);

g_signal_connect(data->source, "pad-added", (GCallback)pad_added_handler, data->rtpDepay);

if(gst_element_link_many(data->rtpDepay, data->rtpQueue, data->h264Parse, data->h264Queue, data->hardDecoder, data->cudaConvert, data->cudaDownload, data->sink, nullptr) != TRUE)

{

printf("gst element link failed\n");

return -1;

}

GstStateChangeReturn ret ;

ret = gst_element_set_state(data->pipeLine, GST_STATE_PLAYING);

if (ret == GST_STATE_CHANGE_FAILURE)

{

std::cerr<< "Unable to set the pipeline to the paused state" << std::endl;

gst_object_unref(data->pipeLine);

return -1;

}

GstSample *sample;

g_signal_emit_by_name(data->sink, "pull-preroll", &sample);

if (sample) {

auto buffer = gst_sample_get_buffer(sample);

if (paddedHeight == 0 && paddedWidth == 0) {

GstCaps *caps = gst_sample_get_caps(sample);

GstStructure* info = gst_caps_get_structure(caps, 0);

gst_structure_get_int(info, "width", &paddedWidth);

gst_structure_get_int(info, "height", &paddedHeight);

const char* format = gst_structure_get_string(info, "format");

gst_structure_get_fraction(info, "framerate", &framerate.first, &framerate.second);

srcFmt = format;

//std::cout << "padded width:" << paddedWidth << "padded height:" << paddedHeight << std::endl;

//std::cout << "format:" << srcFmt << std::endl;

//std::cout << "framerate num:" << framerate.first << "framerate den:" << framerate.second << std::endl;

}

// release sample reference

gst_sample_unref(sample);

}

// set pipeline to playing

ret = gst_element_set_state(data->pipeLine, GST_STATE_PLAYING);

if (ret == GST_STATE_CHANGE_FAILURE) {

gst_object_unref(data->pipeLine);

std::cerr<< "Unable to set the pipeline to the playing state" << std::endl;

return -1;

}

int retNum = 0;

pthread_t errThread;

retNum = pthread_create(&errThread, NULL, ErrHandle, data);

if (retNum)

{

fprintf(stderr, "pthread_create error: %s\n", strerror(retNum));

exit(-1);

}

sleep(2);

//pthread_join()

return 0;

}

```cpp
gstDecoder::~gstDecoder()
{
    // gst_bin_remove(GST_BIN(data->pipeLine), data->hardDecoder);
    // gst_bin_remove(GST_BIN(data->pipeLine), data->cudaConvert);
    // gst_bin_remove(GST_BIN(data->pipeLine), data->cudaDownload);
    if (data)
    {
        printf("free data\n");
        delete data;  // pairs with new in the constructor
        data = nullptr;
    }
}

void gstDecoder::setImgSize(int mWidth, int mHeight)
{
    width = mWidth;
    height = mHeight;
}
```

```cpp
int gstDecoder::readFrame(cv::Mat &frame, double &timestamp)
{
    // Once ErrHandle has seen EOS or an error, stop touching the appsink.
    if (data->exitFlag != 0)
        return -1;

    GstSample *sample = nullptr;
    g_signal_emit_by_name(data->sink, "pull-sample", &sample);
    if (sample == nullptr)
    {
        printf("sample is null\n");  // appsink returns NULL at EOS or when it is stopped
        return -1;
    }

    int ret = -1;
    GstBuffer *buffer = gst_sample_get_buffer(sample);
    GstMapInfo map;
    if (buffer != nullptr && gst_buffer_map(buffer, &map, GST_MAP_READ))
    {
        timestamp = static_cast<double>(GST_BUFFER_PTS(buffer)) / static_cast<double>(GST_SECOND);

        // The appsink caps force BGR (3 bytes per pixel). Rows may be padded,
        // so the stride is derived from the mapped size.
        size_t stride = (paddedHeight > 0) ? map.size / paddedHeight : 0;
        if (srcFmt == "BGR" && paddedWidth > 0 && stride >= static_cast<size_t>(paddedWidth) * 3)
        {
            cv::Mat wrapped(paddedHeight, paddedWidth, CV_8UC3, map.data, stride);
            // Crop to the size from setImgSize() when it fits; copyTo() makes a deep copy,
            // which is needed because map.data becomes invalid after gst_buffer_unmap().
            if (width > 0 && height > 0 && width <= paddedWidth && height <= paddedHeight)
                wrapped(cv::Rect(0, 0, width, height)).copyTo(frame);
            else
                wrapped.copyTo(frame);
            ret = 0;
        }
        else
        {
            printf("unsupported src pixel format or frame layout: %s\n", srcFmt.c_str());
        }
        gst_buffer_unmap(buffer, &map);
    }

    // Release the sample on every return path; a sample that is never unreffed keeps its buffer alive.
    gst_sample_unref(sample);
    return ret;
}
```
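When the rows of a mapped frame are padded (the stride in bytes is larger than width × bytes per pixel), copying it into a tightly packed image has to go row by row; a single memcpy of the whole mapped buffer would overrun the destination. A minimal sketch of that copy, with hypothetical helper names `copyPlaneRows()` and `copyBgrFrame()`. In real code the source stride could come from the caps via `gst_video_info_from_caps()` and `GST_VIDEO_INFO_PLANE_STRIDE()` (`gst/video/video.h`); the sketch leaves GStreamer out so it runs on its own.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Copy `rows` rows of `rowBytes` bytes from a source whose rows start `srcStride`
// bytes apart into a tightly packed destination (destination stride == rowBytes).
inline void copyPlaneRows(uint8_t *dst, const uint8_t *src,
                          size_t srcStride, size_t rowBytes, size_t rows) {
    assert(srcStride >= rowBytes);  // padding can only add bytes to a row
    for (size_t r = 0; r < rows; ++r)
        std::memcpy(dst + r * rowBytes, src + r * srcStride, rowBytes);
}

// Packed BGR: 3 bytes per pixel, so a row of `width` pixels is width * 3 bytes.
// A 1-byte-per-pixel plane (e.g. the Y plane of NV12/I420) would use width instead.
inline void copyBgrFrame(uint8_t *dst, const uint8_t *src,
                         size_t srcStride, size_t width, size_t height) {
    copyPlaneRows(dst, src, srcStride, width * 3, height);
}
```

The key point is that the row length is measured in bytes, so it must include the bytes per pixel, while the stride already is a byte count.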

While decoding runs normally, GPU memory usage does not grow, but when the RTSP stream reaches EOS, the GPU memory is not released.
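One likely contributor is ownership across threads: ErrHandle() runs detached, sets the pipeline to NULL and unrefs it, while readFrame() on another thread may still be using the appsink that belongs to that pipeline. A common alternative is to let the bus thread only signal, and let the thread that owns the decoder stop reading, join the bus thread, and then tear the pipeline down in exactly one place. The sketch below models that ordering with `std::thread` and `std::atomic` so it runs without GStreamer; `FakePipeline` and `DecoderSketch` are hypothetical, and `teardown()` marks where `gst_element_set_state(pipeline, GST_STATE_NULL)` followed by `gst_object_unref(pipeline)` would go.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <thread>

// Hypothetical stand-in for the GStreamer pipeline; counts how often it is torn down.
struct FakePipeline {
    std::atomic<int> teardownCount{0};
    std::atomic<bool> alive{true};
    void teardown() {
        // Real code: gst_element_set_state(pipeline, GST_STATE_NULL); gst_object_unref(pipeline);
        alive = false;
        ++teardownCount;
    }
};

class DecoderSketch {
public:
    explicit DecoderSketch(FakePipeline &p) : pipeline_(p) {
        // The watcher only signals; it never ends the pipeline's lifetime itself.
        watcher_ = std::thread([this] {
            // Real code: block in gst_bus_timed_pop_filtered() for ERROR | EOS.
            std::this_thread::sleep_for(std::chrono::milliseconds(10));
            stopRequested_ = true;
        });
    }

    // Returns false once the watcher has signalled EOS/error.
    bool readFrame() {
        if (stopRequested_)
            return false;
        assert(pipeline_.alive);  // the pipeline is never released under the reader
        return true;
    }

    // Called by the owning thread after readFrame() has returned false.
    void shutdown() {
        if (watcher_.joinable())
            watcher_.join();  // joined instead of detached
        if (!stopped_) {
            pipeline_.teardown();  // the single place that releases the pipeline
            stopped_ = true;
        }
    }

    ~DecoderSketch() { shutdown(); }

private:
    FakePipeline &pipeline_;
    std::atomic<bool> stopRequested_{false};
    bool stopped_ = false;
    std::thread watcher_;
};
```

With a real bus, the watcher can also use a finite timeout in `gst_bus_timed_pop_filtered()` (for example `100 * GST_MSECOND`, as in the commented-out line in ErrHandle()) and check a stop flag, so the owner can join it even if no EOS ever arrives.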

If I call `gst_bin_remove(GST_BIN(data->pipeLine), data->cudaConvert);`, I get this warning:

`Trying to dispose element CudaConvert, but it is in PAUSED instead of the NULL state`