I’m new to GStreamer and I’m working with GStreamer-Sharp, Visual Basic and Visual Studio 2022. I’m trying to create a simple test application that prepares a sequence of square, greyscale images (i.e. video frames) at 7.2 fps and presents them to GStreamer via an appsrc, for encoding with x264enc and streaming as RTP over UDP. My pipeline:

    appsrc ! video/x-raw,format=GRAY8,width=256,height=256,framerate=72/10 ! x264enc tune=zerolatency qp-max=0 key-int-max=72 bframes=3 intra-refresh=1 noise-reduction=200 ! rtph264pay pt=96 ! udpsink host=127.0.0.1 port=5000

From the x264enc log I can see that video data is arriving and being compressed. However, this activity stops after approximately 50 frames. After a further 4 frames, appsrc begins emitting enough-data signals, presumably because x264enc is no longer taking any data and the appsrc’s input buffer has filled.

Looking at the rtph264pay log, I see only a single input frame. The udpsink log is empty. It’s as though rtph264pay and udpsink are not linked.

I’m hoping that someone would be kind enough to take a look at my code and see if they can spot my mistake.

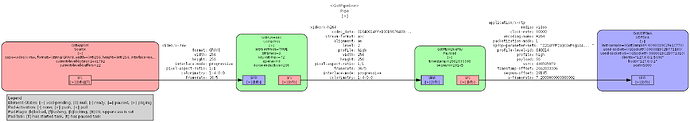

I’ve tested my pipeline (with appsrc replaced by videotestsrc and a capsfilter) by running it with gst-launch-1.0 (with GST_DEBUG_DUMP_DOT_DIR set.) Running my own application with GST_DEBUG_DUMP_DOT_DIR set, and calling Debug.BinToDotFile() after the 75th frame, I see the following:

The two topologies look sufficiently similar that I feel confident my GStreamer-Sharp application is mostly correct.

The rtph264pay log shows the following:

    gstrtph264pay.c:423:gst_rtp_h264_pay_getcaps:<Payload> returning caps video/x-h264, stream-format=(string)avc, alignment=(string)au; video/x-h264, stream-format=(string)byte-stream, alignment=(string){ nal, au }
    gstrtph264pay.c:423:gst_rtp_h264_pay_getcaps:<Payload> returning caps video/x-h264, stream-format=(string)avc, alignment=(string)au; video/x-h264, stream-format=(string)byte-stream, alignment=(string){ nal, au }
    gstrtph264pay.c:414:gst_rtp_h264_pay_getcaps:<Payload> Intersect video/x-h264, stream-format=(string)avc, alignment=(string)au; video/x-h264, stream-format=(string)byte-stream, alignment=(string){ nal, au } and filter video/x-h264, framerate=(fraction)[ 0/1, 2147483647/1 ], width=(int)[ 1, 2147483647 ], height=(int)[ 1, 2147483647 ], stream-format=(string){ avc, byte-stream }, alignment=(string)au, profile=(string){ high-4:4:4, high-4:2:2, high-10, high, main, baseline, constrained-baseline, high-4:4:4-intra, high-4:2:2-intra, high-10-intra }
    gstrtph264pay.c:423:gst_rtp_h264_pay_getcaps:<Payload> returning caps video/x-h264, framerate=(fraction)[ 0/1, 2147483647/1 ], width=(int)[ 1, 2147483647 ], height=(int)[ 1, 2147483647 ], stream-format=(string)avc, alignment=(string)au, profile=(string){ high-4:4:4, high-4:2:2, high-10, high, main, baseline, constrained-baseline, high-4:4:4-intra, high-4:2:2-intra, high-10-intra }; video/x-h264, framerate=(fraction)[ 0/1, 2147483647/1 ], width=(int)[ 1, 2147483647 ], height=(int)[ 1, 2147483647 ], stream-format=(string)byte-stream, alignment=(string)au, profile=(string){ high-4:4:4, high-4:2:2, high-10, high, main, baseline, constrained-baseline, high-4:4:4-intra, high-4:2:2-intra, high-10-intra }
    gstrtph264pay.c:414:gst_rtp_h264_pay_getcaps:<Payload> Intersect video/x-h264, stream-format=(string)avc, alignment=(string)au; video/x-h264, stream-format=(string)byte-stream, alignment=(string){ nal, au } and filter video/x-h264, framerate=(fraction)[ 0/1, 2147483647/1 ], width=(int)[ 1, 2147483647 ], height=(int)[ 1, 2147483647 ], stream-format=(string){ avc, byte-stream }, alignment=(string)au, profile=(string){ high-4:4:4, high-4:2:2, high-10, high, main, baseline, constrained-baseline, high-4:4:4-intra, high-4:2:2-intra, high-10-intra }
    gstrtph264pay.c:423:gst_rtp_h264_pay_getcaps:<Payload> returning caps video/x-h264, framerate=(fraction)[ 0/1, 2147483647/1 ], width=(int)[ 1, 2147483647 ], height=(int)[ 1, 2147483647 ], stream-format=(string)avc, alignment=(string)au, profile=(string){ high-4:4:4, high-4:2:2, high-10, high, main, baseline, constrained-baseline, high-4:4:4-intra, high-4:2:2-intra, high-10-intra }; video/x-h264, framerate=(fraction)[ 0/1, 2147483647/1 ], width=(int)[ 1, 2147483647 ], height=(int)[ 1, 2147483647 ], stream-format=(string)byte-stream, alignment=(string)au, profile=(string){ high-4:4:4, high-4:2:2, high-10, high, main, baseline, constrained-baseline, high-4:4:4-intra, high-4:2:2-intra, high-10-intra }
    gstrtph264pay.c:1746:gst_rtp_h264_pay_sink_event:<Payload> New stream detected => Clear SPS and PPS
    gstrtph264pay.c:1202:gst_rtp_h264_pay_send_bundle:<Payload> no bundle, nothing to send
    gstrtph264pay.c:414:gst_rtp_h264_pay_getcaps:<Payload> Intersect video/x-h264, stream-format=(string)avc, alignment=(string)au; video/x-h264, stream-format=(string)byte-stream, alignment=(string){ nal, au } and filter video/x-h264, codec_data=(buffer)01640014ffe1001967640014f159010086c05b2000000300a000000911e28532c001000568efb2c8b0, stream-format=(string)avc, alignment=(string)au, level=(string)2, profile=(string)high, width=(int)256, height=(int)256, pixel-aspect-ratio=(fraction)1/1, framerate=(fraction)36/5, interlace-mode=(string)progressive, colorimetry=(string)1:4:0:0
    gstrtph264pay.c:423:gst_rtp_h264_pay_getcaps:<Payload> returning caps video/x-h264, codec_data=(buffer)01640014ffe1001967640014f159010086c05b2000000300a000000911e28532c001000568efb2c8b0, stream-format=(string)avc, alignment=(string)au, level=(string)2, profile=(string)high, width=(int)256, height=(int)256, pixel-aspect-ratio=(fraction)1/1, framerate=(fraction)36/5, interlace-mode=(string)progressive, colorimetry=(string)1:4:0:0
    gstrtph264pay.c:587:gst_rtp_h264_pay_setcaps:<Payload> have packetized h264
    gstrtph264pay.c:606:gst_rtp_h264_pay_setcaps:<Payload> profile 640014
    gstrtph264pay.c:612:gst_rtp_h264_pay_setcaps:<Payload> nal length 4
    gstrtph264pay.c:615:gst_rtp_h264_pay_setcaps:<Payload> num SPS 1
    gstrtph264pay.c:631:gst_rtp_h264_pay_setcaps:<Payload> SPS 0 size 25
    gstrtph264pay.c:652:gst_rtp_h264_pay_setcaps:<Payload> num PPS 1
    gstrtph264pay.c:663:gst_rtp_h264_pay_setcaps:<Payload> PPS 0 size 5
    gstrtph264pay.c:1469:gst_rtp_h264_pay_handle_buffer:<Payload> got 861 bytes
    gstrtph264pay.c:1488:gst_rtp_h264_pay_handle_buffer:<Payload> got NAL of size 2
    gstrtph264pay.c:954:gst_rtp_h264_pay_payload_nal:<Payload> payloading NAL Unit: datasize=2 type=9 pts=1000:00:00.000000000
    gstrtph264pay.c:1058:gst_rtp_h264_pay_payload_nal_fragment:<Payload> sending NAL Unit: datasize=2 mtu=1400

My pipeline is configured as follows:

    Private Sub ConfigurePipeline()
        Gst.Application.Init()

        ' Describe the raw frames: 8-bit greyscale at 72/10 = 7.2 fps.
        VInfo1 = New VideoInfo()
        VInfo1.SetFormat(VideoFormat.Gray8, SrcSize.Width, SrcSize.Height)
        VInfo1.FpsN = 72
        VInfo1.FpsD = 10
        VCaps = VInfo1.ToCaps()
        Diagnostics.Debug.WriteLine(VCaps.ToString)

        ' Frame period (FpsD / FpsN) and per-frame duration in nanoseconds.
        FrameInterval = VInfo1.FpsD / VInfo1.FpsN
        FrameDuration = Util.Uint64ScaleInt(VInfo1.FpsD, Gst.Constants.SECOND, VInfo1.FpsN)

        ' A single test frame filled with a constant grey value.
        Dim FrameBytes As UInteger = 256 * 256
        ReDim FrameData(FrameBytes - 1)
        System.Array.Fill(FrameData, 127)

        'Pipe = Parse.Launch("appsrc ! video/x-raw,format=GRAY8,width=256,height=256,framerate=72/10 " &
        ' "! x264enc tune=zerolatency qp-max=0 key-int-max=72 bframes=3 intra-refresh=1 noise-reduction=200 " &
        ' "! rtph264pay pt=96 ! udpsink host=127.0.0.1 port=5000")

        ' Create the pipeline and watch its bus for messages.
        Pipe = New Pipeline("Pipe")
        PBus = Pipe.Bus
        PBus.AddSignalWatch()
        AddHandler PBus.Message, AddressOf Handle_PBus_Message

        ' Create the elements.
        Source = New AppSrc("Source")
        Compress = ElementFactory.Make("x264enc", "Compress")
        Payload = ElementFactory.Make("rtph264pay", "Payload")
        UDPSink = ElementFactory.Make("udpsink", "UDPSink")

        ' Configure the appsrc.
        Source.Caps = VCaps
        Source.SetProperty("stream-type", New GLib.Value(AppStreamType.Stream))
        Source.SetProperty("format", New GLib.Value(Gst.Constants.TIME_FORMAT))
        Source.SetProperty("emit-signals", New GLib.Value(True))
        AddHandler Source.NeedData, AddressOf Handle_Source_NeedData
        AddHandler Source.EnoughData, AddressOf Handle_Source_EnoughData

        ' Configure the encoder, payloader and sink.
        Compress.SetProperty("tune", New GLib.Value("zerolatency"))
        Compress.SetProperty("qp-max", New GLib.Value(0))
        Compress.SetProperty("key-int-max", New GLib.Value(72))
        Compress.SetProperty("bframes", New GLib.Value(3))
        Compress.SetProperty("intra-refresh", New GLib.Value(1))
        Compress.SetProperty("noise-reduction", New GLib.Value(200))
        Payload.SetProperty("pt", New GLib.Value(96))
        UDPSink.SetProperty("host", New GLib.Value("127.0.0.1"))
        UDPSink.SetProperty("port", New GLib.Value(5000))

        ' Add the elements to the pipeline and link them in order.
        Pipe.Add(Source, Compress, Payload, UDPSink)
        Source.Link(Compress)
        Compress.Link(Payload)
        Payload.Link(UDPSink)

        Dim Result As StateChangeReturn = Pipe.SetState(State.Playing)
        If Result = StateChangeReturn.Failure Then
            Diagnostics.Debug.WriteLine("Unable to set the pipeline to the playing state")
        Else
            ' Run() blocks until the main loop is quit.
            MainLoop = New MainLoop()
            MainLoop.Run()
            FrameTimer.Stop()
            Diagnostics.Debug.WriteLine("Mainloop has exited, stopping pipeline")
            Pipe.SetState(State.Null)
        End If

        Diagnostics.Debug.WriteLine("Disposing pipeline elements")
        Pipe.Dispose()
        Source.Dispose()
        Compress.Dispose()
        Payload.Dispose()
        UDPSink.Dispose()
    End Sub

The AppSrc.NeedData event handler starts a System.Timers.Timer which ticks at 7.2 Hz, and the Timer.Elapsed event handler calls the following method, which transfers data to the AppSrc:

    Private Sub NewFrame()
        Using GSTBuffer As New Buffer(FrameData)
            GSTBuffer.Pts = Timestamp
            GSTBuffer.Dts = Timestamp
            GSTBuffer.Duration = FrameDuration
            Timestamp += FrameDuration
            Source.PushBuffer(GSTBuffer)
        End Using
    End Sub
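
For reference, the timestamp arithmetic used above can be worked through without GStreamer. The following is a standalone sketch, not part of the application: the module and variable names are made up for illustration, and it assumes that `Util.Uint64ScaleInt(val, num, denom)` computes `val * num / denom` truncated to an integer, as the underlying `gst_util_uint64_scale_int` does.

```vb
Imports System

Module FrameTimingSketch
    ' GStreamer timestamps are expressed in nanoseconds.
    Private Const NanosPerSecond As ULong = 1000000000UL

    Sub Main()
        Dim fpsN As ULong = 72UL   ' frame-rate numerator
        Dim fpsD As ULong = 10UL   ' frame-rate denominator

        ' Duration of one frame in nanoseconds, truncated to an integer
        ' (assumed to match what Util.Uint64ScaleInt returns).
        Dim frameDuration As ULong = (fpsD * NanosPerSecond) \ fpsN
        Console.WriteLine("frame duration = {0} ns", frameDuration)

        ' Accumulate timestamps the same way NewFrame() does.
        Dim pts As ULong = 0UL
        For frame As Integer = 0 To 3
            Console.WriteLine("frame {0}: pts = {1} ns", frame, pts)
            pts += frameDuration
        Next

        ' Compare 72 accumulated frames with the nominal 10 seconds.
        Dim elapsed As ULong = 72UL * frameDuration
        Console.WriteLine("72 frames = {0} ns, nominal = {1} ns", elapsed, 10UL * NanosPerSecond)
    End Sub
End Module
```

With 72/10 fps the truncated duration is 138888888 ns, so the accumulated timestamps fall 64 ns behind the nominal time every 72 frames, which is negligible for this test.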

The AppSrc.EnoughData event handler only prints a message to the console.
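
The timer arrangement described above (NeedData starts a 7.2 Hz timer, Elapsed pushes a frame, EnoughData only logs) can be sketched roughly as follows. This is an illustrative reconstruction rather than the actual application code: `OnNeedData`, `OnEnoughData` and `OnElapsed` are hypothetical stand-ins for the real handlers, they take no GStreamer arguments, and the call to `NewFrame()` is replaced by a console message so that the sketch runs without GStreamer.

```vb
Imports System
Imports System.Timers

Module FrameTimerSketch
    ' Timer period in milliseconds for 72/10 frames per second (about 138.9 ms).
    Private ReadOnly FrameTimer As New Timer(1000.0 * 10 / 72)

    ' Stand-in for the AppSrc.NeedData handler: start producing frames.
    Private Sub OnNeedData()
        FrameTimer.Start()
    End Sub

    ' Stand-in for the AppSrc.EnoughData handler: it only logs and does not stop the timer.
    Private Sub OnEnoughData()
        Console.WriteLine("enough-data")
    End Sub

    ' Runs on a thread-pool thread each time the timer elapses.
    Private Sub OnElapsed(sender As Object, e As ElapsedEventArgs)
        ' The real application would call NewFrame() here.
        Console.WriteLine("tick at {0:HH:mm:ss.fff}", e.SignalTime)
    End Sub

    Sub Main()
        AddHandler FrameTimer.Elapsed, AddressOf OnElapsed
        OnNeedData()                          ' simulate the first need-data signal
        System.Threading.Thread.Sleep(1000)   ' let the timer tick for about one second
        OnEnoughData()                        ' simulate an enough-data signal
        FrameTimer.Stop()
    End Sub
End Module
```

Because the Elapsed handler runs on a thread-pool thread, frames are pushed from a different thread than the one running the main loop.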

I’d be very grateful if someone could examine the above and make any suggestions for where to look for my mistake.

Thanks,

Tony