Hi there,

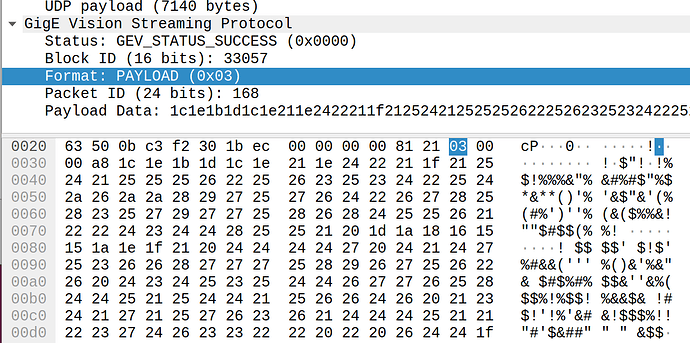

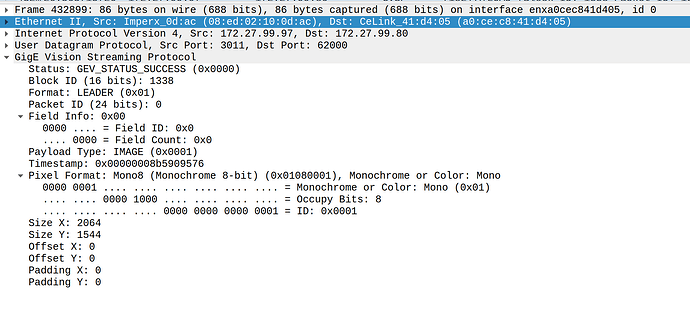

I have an Imperx GigE Vision camera and I am trying to create a simple pipeline that listens on its UDP port and displays the video stream, but the image is not displaying correctly. I verified that the image comes through correctly in the Imperx utility, in the Imperx sample Python scripts, and in Aravis Viewer.

I’ve tried a zillion different combinations of caps, elements, pixel formats, buffer-size values, etc., so I won’t post everything I’ve tried. Give or take some variations, my pipeline looks like the one below.

I currently have the camera outputting just 8-bit grayscale:

gst-launch-1.0 -e -v udpsrc port=62000 ! rawvideoparse width=2064 height=1544 framerate=10/1 format=25 ! queue ! "video/x-raw, format=(string)GRAY8" ! videoconvert ! ximagesink
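
For reference, here is the frame size that those rawvideoparse settings imply. This is only a sanity-check sketch: it assumes GRAY8 is exactly one byte per pixel with no row padding, and the function names are made up for illustration.

```python
def gray8_frame_bytes(width: int, height: int) -> int:
    """Bytes in one GRAY8 frame, ASSUMING 1 byte per pixel and no row padding."""
    return width * height


def bytes_per_second(width: int, height: int, fps_num: int, fps_den: int = 1) -> int:
    """Raw pixel data rate implied by the frame size and frame rate."""
    return gray8_frame_bytes(width, height) * fps_num // fps_den


# Values taken from the pipeline above: 2064x1544 at 10/1 fps.
frame = gray8_frame_bytes(2064, 1544)
rate = bytes_per_second(2064, 1544, 10)
print(frame)  # 3186816
print(rate)   # 31868160
```

So rawvideoparse should be cutting the incoming byte stream into chunks of 3186816 bytes. If the bytes arriving on the port per frame do not add up to exactly that, consecutive frames would not line up.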

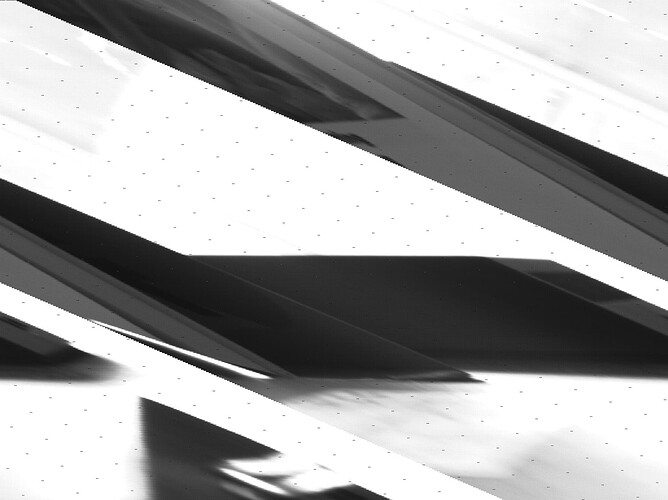

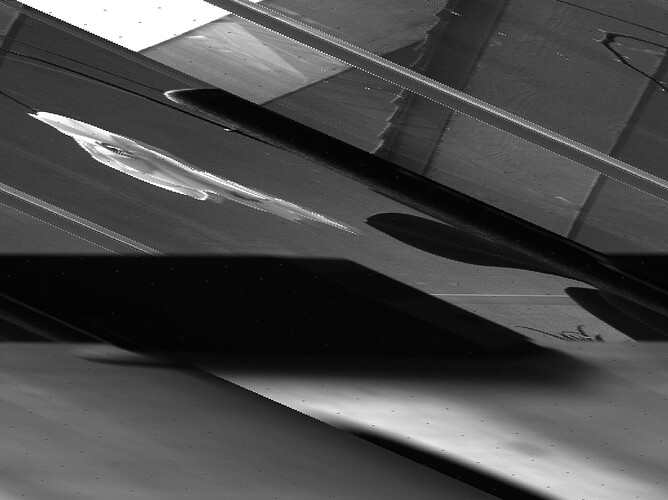

I’m getting an output that looks like this - slanted and somewhat repeated.
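
One guess that I can't confirm: a slant like this is what would happen if a fixed number of non-pixel bytes were mixed into the stream at regular intervals, so that every row starts a little later than the one before. The sketch below only estimates that effect. The payload and header sizes are HYPOTHETICAL placeholders, not numbers from the camera manual, and the function names are invented for illustration.

```python
def drift_per_row(width: int, payload: int, header: int) -> float:
    """Average number of non-pixel bytes that end up in each displayed row.

    ASSUMES every packet is `header` extra bytes followed by `payload` pixel
    bytes, and that the parser treats all of them as pixels.
    """
    return width * header / (payload + header)


def rows_until_wrap(width: int, payload: int, header: int) -> float:
    """Rows after which the accumulated shift equals one full row width."""
    return width / drift_per_row(width, payload, header)


# HYPOTHETICAL numbers: 1440 pixel bytes and 8 extra bytes per packet.
shift = drift_per_row(width=2064, payload=1440, header=8)
wrap = rows_until_wrap(width=2064, payload=1440, header=8)
print(f"{shift:.2f} bytes of shift per row")
print(f"{wrap:.0f} rows until the image has wrapped around once")
```

If the measured slant in the screenshot matched a calculation like this for some plausible packet layout, that would point at extra bytes in the stream rather than at the caps.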

Most of the examples I’ve found online with udpsrc also use RTP, but I’m fairly confident this camera does not use RTP. It’s not mentioned anywhere in the manual, and my attempts to run a pipeline with RTP-related elements have all failed with “not a valid RTP payload” or a similar error.
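
A quick way to check this outside GStreamer would be to look at the raw datagrams directly. The sketch below is a rough debugging aid, not a protocol parser: `looks_like_rtp` only tests the two facts that RTP has a 12-byte fixed header and carries version 2 in the top two bits of the first byte, so a match is a hint and not proof. The function names are made up, and port 62000 is simply the port from the pipeline above.

```python
import socket


def looks_like_rtp(packet: bytes) -> bool:
    """Rough heuristic: long enough for an RTP header and version field == 2."""
    return len(packet) >= 12 and (packet[0] >> 6) == 2


def describe(packet: bytes, head: int = 16) -> str:
    """One-line summary: total length, RTP guess, and the first bytes in hex."""
    return (
        f"len={len(packet)} rtp_like={looks_like_rtp(packet)} "
        f"head={packet[:head].hex(' ')}"
    )


def dump_packets(port: int = 62000, count: int = 20) -> None:
    """Print a summary of the next `count` datagrams received on `port`."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("", port))
        for _ in range(count):
            data, _addr = sock.recvfrom(65535)
            print(describe(data))
```

Calling `dump_packets(62000)` while the camera is streaming (and while no pipeline is holding the port) should show whether every datagram has the same length and whether each one starts with bytes that look like a header rather than pixel data.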

I WAS able to use the aravis plugin to get the pipeline to work, but it doesn’t seem to support color (all color options in aravis viewer are grayed out). And I do think this should work with just a udpsrc (right?)

Here’s the verbose output from the udpsrc pipeline above:

/GstPipeline:pipeline0/GstRawVideoParse:rawvideoparse0.GstPad:src: caps = video/x-raw, format=(string)GRAY8, width=(int)2064, height=(int)1544, interlace-mode=(string)progressive, pixel-aspect-ratio=(fraction)1/1, framerate=(fraction)10/1

/GstPipeline:pipeline0/GstQueue:queue0.GstPad:sink: caps = video/x-raw, format=(string)GRAY8, width=(int)2064, height=(int)1544, interlace-mode=(string)progressive, pixel-aspect-ratio=(fraction)1/1, framerate=(fraction)10/1

/GstPipeline:pipeline0/GstQueue:queue0.GstPad:src: caps = video/x-raw, format=(string)GRAY8, width=(int)2064, height=(int)1544, interlace-mode=(string)progressive, pixel-aspect-ratio=(fraction)1/1, framerate=(fraction)10/1

/GstPipeline:pipeline0/GstCapsFilter:capsfilter0.GstPad:src: caps = video/x-raw, format=(string)GRAY8, width=(int)2064, height=(int)1544, interlace-mode=(string)progressive, pixel-aspect-ratio=(fraction)1/1, framerate=(fraction)10/1

/GstPipeline:pipeline0/GstVideoConvert:videoconvert0.GstPad:src: caps = video/x-raw, width=(int)2064, height=(int)1544, interlace-mode=(string)progressive, pixel-aspect-ratio=(fraction)1/1, framerate=(fraction)10/1, format=(string)BGRx

/GstPipeline:pipeline0/GstXImageSink:ximagesink0.GstPad:sink: caps = video/x-raw, width=(int)2064, height=(int)1544, interlace-mode=(string)progressive, pixel-aspect-ratio=(fraction)1/1, framerate=(fraction)10/1, format=(string)BGRx

/GstPipeline:pipeline0/GstVideoConvert:videoconvert0.GstPad:sink: caps = video/x-raw, format=(string)GRAY8, width=(int)2064, height=(int)1544, interlace-mode=(string)progressive, pixel-aspect-ratio=(fraction)1/1, framerate=(fraction)10/1

/GstPipeline:pipeline0/GstCapsFilter:capsfilter0.GstPad:sink: caps = video/x-raw, format=(string)GRAY8, width=(int)2064, height=(int)1544, interlace-mode=(string)progressive, pixel-aspect-ratio=(fraction)1/1, framerate=(fraction)10/1

It does seem like there’s some inexplicable conversion to BGRx that I was trying unsuccessfully to make go away. Maybe that’s an issue?

And here is the verbose output from this pipeline (which does work):

gst-launch-1.0 -e -v aravissrc ! videoconvert ! queue ! xvimagesink

/GstPipeline:pipeline0/GstAravis:aravis0.GstPad:src: caps = video/x-raw, format=(string)GRAY8, width=(int)2064, height=(int)1544, framerate=(fraction)10/1

/GstPipeline:pipeline0/GstVideoConvert:videoconvert0.GstPad:src: caps = video/x-raw, width=(int)2064, height=(int)1544, framerate=(fraction)10/1, format=(string)YV12

/GstPipeline:pipeline0/GstQueue:queue0.GstPad:sink: caps = video/x-raw, width=(int)2064, height=(int)1544, framerate=(fraction)10/1, format=(string)YV12

/GstPipeline:pipeline0/GstQueue:queue0.GstPad:src: caps = video/x-raw, width=(int)2064, height=(int)1544, framerate=(fraction)10/1, format=(string)YV12

/GstPipeline:pipeline0/GstXvImageSink:xvimagesink0.GstPad:sink: caps = video/x-raw, width=(int)2064, height=(int)1544, framerate=(fraction)10/1, format=(string)YV12

/GstPipeline:pipeline0/GstVideoConvert:videoconvert0.GstPad:sink: caps = video/x-raw, format=(string)GRAY8, width=(int)2064, height=(int)1544, framerate=(fraction)10/1

I’ve been working on this for days and would really appreciate any guidance or suggestions for debugging.