Hi all,

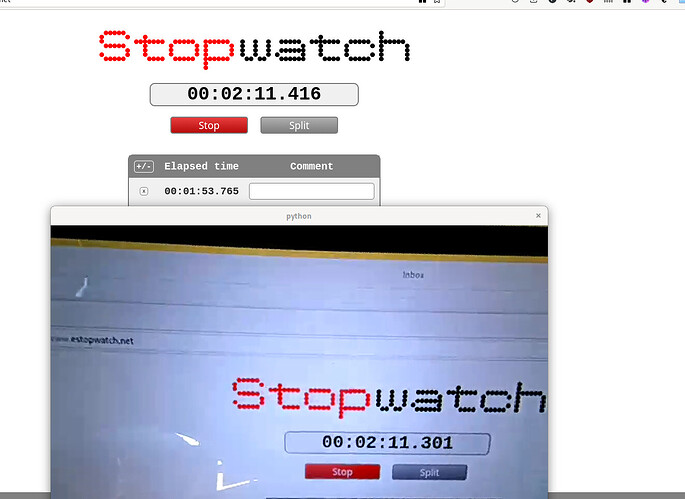

I am currently working on a project where I need to estimate the glass-to-glass latency of my system. I point a simple camera at a precise timer and display the live captured frames on the same computer. I then take several screenshots and, in each one, measure the time difference between the timer and its image in the displayed frame.
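The screenshot method above boils down to averaging per-screenshot differences. Here is a minimal sketch of that step. It assumes each screenshot yields two readings in milliseconds: the live timer value and the timer value visible in the displayed camera frame. The function name and the sample readings are hypothetical, not measured data.

```python
from statistics import mean, stdev


def glass_to_glass_ms(samples):
    """Return (mean, sample standard deviation) of the latency in ms.

    samples: list of (timer_ms, displayed_ms) pairs, one per screenshot.
    timer_ms is the live timer; displayed_ms is the timer value shown
    inside the captured-and-displayed camera frame.
    """
    deltas = [timer - displayed for timer, displayed in samples]
    if any(d < 0 for d in deltas):
        # The displayed frame can only lag behind the live timer.
        raise ValueError("displayed frame cannot be ahead of the live timer")
    # A single screenshot gives no spread estimate.
    spread = stdev(deltas) if len(deltas) > 1 else 0.0
    return mean(deltas), spread


# Hypothetical readings for illustration only
readings = [(10_120, 10_000), (20_250, 20_130), (30_365, 30_240)]
avg, spread = glass_to_glass_ms(readings)
print(f"{avg:.1f} ms +/- {spread:.1f} ms")
```

Taking several screenshots and reporting the spread helps, because each single reading is quantized by the camera frame rate and the display refresh.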

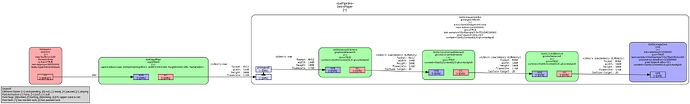

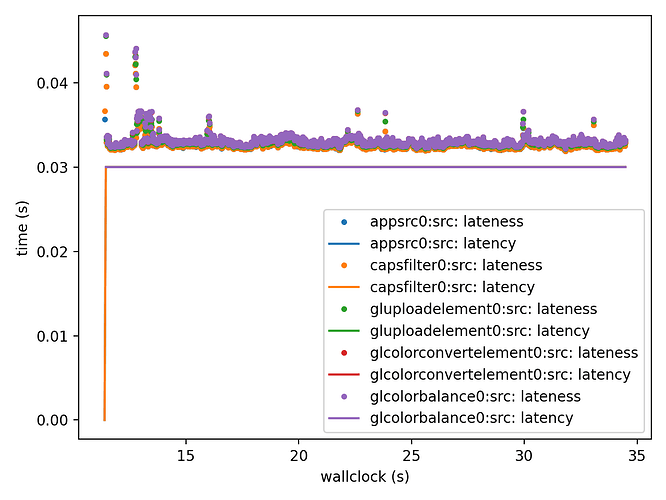

My GStreamer pipeline reports a latency of 45 ms: 30 ms from the camera appsrc and 15 ms of processing-deadline. This matches the data captured by the tracers.

However, the measured latency is still approximately 120 ms. Since vsync is disabled, I am wondering whether additional buffering is happening somewhere else in the system.
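The size of the gap can be written out from the numbers above. In this sketch the 60 Hz display refresh rate is an assumption for illustration only, since the post does not state it. Expressing the unaccounted time in refresh periods can help judge whether it looks like a few frames queued somewhere outside the pipeline.

```python
def unaccounted_latency(measured_ms, reported_components_ms, refresh_hz):
    """Compare measured glass-to-glass latency with the pipeline's reported latency.

    Returns (reported_ms, gap_ms, gap_in_refresh_periods).
    """
    reported = sum(reported_components_ms.values())
    gap = measured_ms - reported
    # gap / (1000 / refresh_hz), written to avoid float rounding error
    periods = gap * refresh_hz / 1000.0
    return reported, gap, periods


# 120 ms measured; 30 ms (camera appsrc) + 15 ms (processing-deadline) reported.
# refresh_hz=60 is a hypothetical display rate, not taken from the post.
components = {"camera appsrc": 30, "processing-deadline": 15}
print(unaccounted_latency(120, components, refresh_hz=60))
```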

I would greatly appreciate any insights or suggestions regarding this issue.

Fabien