This is a cross post of this question: Pipeline UDP sink generates many h264 errors - DeepStream SDK - NVIDIA Developer Forums

I have a video processing pipeline to which I am trying to add a UDP sink. Specifically, I would like a simple MPEG-TS stream, not an RTP/RTSP stream.

My code to create the sink:

```cpp
tl::expected<GstElement*, std::string> create_sink(const UdpSink& us) {
  logger_->info("[create_sink] adding udp sink");

  GstElement* udp_sink_bin = gst_bin_new("udp_sink_bin");

  // ************************** create udp sink queue element ***********************************
  GstElement* sink_queue = gst_element_factory_make("queue", "udp_sink_queue");
  if (!sink_queue) {
    return tl::unexpected("[create_sink] Failed to create udp_sink_queue");
  }
  gst_bin_add(GST_BIN(udp_sink_bin), sink_queue);

  // link queue element to bin ghost pad
  GstPad* queue_sink_pad = gst_element_get_static_pad(sink_queue, "sink");
  if (!queue_sink_pad) {
    return tl::unexpected("[create_sink][udp sink] failed to get queue sink pad");
  }

  // create the sink pad of our sink bin and connect it to the tee
  if (!gst_element_add_pad(udp_sink_bin, gst_ghost_pad_new("sink", queue_sink_pad))) {
    return tl::unexpected("[create_sink][udp] Failed to add ghost pad in udp sink bin");
  }
  gst_object_unref(queue_sink_pad);

  // ************************** create udp sink convert element **********************************
  GstElement* nvconv = gst_element_factory_make("nvvideoconvert", "udp_nvvideoconvert");
  if (!nvconv) {
    return tl::unexpected("[create_sink][udp] Failed to create nvvideoconvert");
  }
  gst_bin_add(GST_BIN(udp_sink_bin), nvconv);
  if (!gst_element_link(sink_queue, nvconv)) {
    return tl::unexpected("[create_sink][udp] Failed to link sink queue->nvvideoconvert");
  }

  // ************************** create udp sink caps filter **************************************
  GstElement* capsfilter = gst_element_factory_make("capsfilter", "udp_capsfilter");
  if (!capsfilter) {
    return tl::unexpected("[create_sink][udp] Failed to create udp_capsfilter");
  }

  // caps for the h264 HW encoder
  GstCaps* caps = gst_caps_from_string("video/x-raw(memory:NVMM), format=I420");
  g_object_set(G_OBJECT(capsfilter), "caps", caps, NULL);
  gst_bin_add(GST_BIN(udp_sink_bin), capsfilter);
  if (!gst_element_link(nvconv, capsfilter)) {
    return tl::unexpected("[create_sink][udp] Failed to link sink nvvideoconvert->capsfilter");
  }
  gst_caps_unref(caps);

  // ************************** create udp sink encoder element **********************************
  GstElement* encoder = gst_element_factory_make("nvv4l2h264enc", "nvv4l2h264enc");
  if (!encoder) {
    return tl::unexpected("[create_sink] Failed to create nvv4l2h264enc");
  }
  g_object_set(G_OBJECT(encoder), "bitrate", (gint)us.bitrate, NULL);
  g_object_set(G_OBJECT(encoder), "profile", 0, NULL);
  g_object_set(G_OBJECT(encoder), "iframeinterval", 5, "idrinterval", 5, NULL);
  if (is_jetson_gpu_) {
    g_object_set(G_OBJECT(encoder), "preset-level", 1, NULL);
    g_object_set(G_OBJECT(encoder), "insert-sps-pps", 1, NULL);
  } else {
    g_object_set(G_OBJECT(nvconv), "gpu-id", 0, NULL);
  }
  gst_bin_add(GST_BIN(udp_sink_bin), encoder);
  if (!gst_element_link(capsfilter, encoder)) {
    return tl::unexpected("[create_sink][udp] Failed to link sink caps_filter->nvv4l2h264enc");
  }

  // ************************** create udp sink h264parse element ********************************
  GstElement* parser = gst_element_factory_make("h264parse", "h264parse");
  if (!parser) {
    return tl::unexpected("[create_sink] Failed to create h264parse for udp sink");
  }
  g_object_set(G_OBJECT(parser), "config-interval", -1, NULL);
  gst_bin_add(GST_BIN(udp_sink_bin), parser);
  if (!gst_element_link(encoder, parser)) {
    return tl::unexpected("[create_sink][udp] Failed to link nvv4l2h264enc->h264parse");
  }

  // ************************** create udp sink mpegtsmux element ********************************
  GstElement* mpegtsmux = gst_element_factory_make("mpegtsmux", "mpegtsmux");
  if (!mpegtsmux) {
    return tl::unexpected("[create_sink][udp] Failed to create mpegtsmux for udp sink");
  }
  gst_bin_add(GST_BIN(udp_sink_bin), mpegtsmux);

  // get the h264parse src pad to link to
  GstPad* parser_src_pad = gst_element_get_static_pad(parser, "src");
  if (!parser_src_pad) {
    return tl::unexpected("[create_sink][udp sink] failed to get h264parse src pad");
  }

  // request a sink pad from mpegtsmux
  GstPad* sink_pad = gst_element_get_request_pad(mpegtsmux, "sink_%d");
  if (!sink_pad) {
    gst_object_unref(parser_src_pad);
    return tl::unexpected("[create_sink][udp] failed to request sink pad on mpegtsmux");
  }
  if (auto res = gst_pad_link(parser_src_pad, sink_pad); res != GST_PAD_LINK_OK) {
    gst_object_unref(parser_src_pad);
    gst_object_unref(sink_pad);
    return tl::unexpected(
        fmt::format("[create_sink][udp] Linking of h264parse src and mpegtsmux sink pads failed. "
                    "GstPadLinkReturn value: {}",
                    (int)res));
  }
  gst_object_unref(parser_src_pad);
  gst_object_unref(sink_pad);

  // ************************** create udp sink element ******************************************
  GstElement* udp_sink = gst_element_factory_make("udpsink", "udpsink");
  if (!udp_sink) {
    return tl::unexpected("[create_sink] Failed to create udpsink element");
  }
  // set properties only after the null check above
  g_object_set(G_OBJECT(udp_sink), "host", us.host.c_str(), "port", us.port, "async", FALSE,
               "sync", 0, NULL);
  gst_bin_add(GST_BIN(udp_sink_bin), udp_sink);
  if (!gst_element_link(mpegtsmux, udp_sink)) {
    return tl::unexpected("[create_sink][udp] Failed to link mpegtsmux -> udp_sink");
  }

  return udp_sink_bin;
}
```
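To test the sink chain on its own, separate from the source and inference parts, the same elements can be written as a pipeline description string. The sketch below only builds that string. `UdpSinkParams` and `udp_sink_launch_description` are hypothetical names for illustration, `videotestsrc` at 5 fps stands in for the real upstream, and the Jetson-only encoder properties are left out.

```cpp
#include <cstdint>
#include <sstream>
#include <string>

// Hypothetical stand-in for the UdpSink settings used by create_sink().
struct UdpSinkParams {
    std::string host;       // destination address for udpsink
    int port;               // destination port for udpsink
    std::uint32_t bitrate;  // value passed to the encoder's "bitrate" property
};

// Builds a pipeline description that mirrors the element chain in create_sink():
// queue -> nvvideoconvert -> capsfilter -> nvv4l2h264enc -> h264parse -> mpegtsmux -> udpsink.
// The videotestsrc front end is an assumption made only to keep the test self-contained.
std::string udp_sink_launch_description(const UdpSinkParams& p) {
    std::ostringstream os;
    os << "videotestsrc is-live=true"
       << " ! video/x-raw,framerate=5/1"
       << " ! queue"
       << " ! nvvideoconvert"
       << " ! video/x-raw(memory:NVMM),format=I420"
       << " ! nvv4l2h264enc bitrate=" << p.bitrate
       << " profile=0 iframeinterval=5 idrinterval=5"
       << " ! h264parse config-interval=-1"
       << " ! mpegtsmux"
       << " ! udpsink host=" << p.host << " port=" << p.port
       << " sync=false async=false";
    return os.str();
}
```

The resulting string can be handed to `gst_parse_launch()`, or pasted after `gst-launch-1.0` on a shell if the caps containing parentheses are quoted. If this reduced pipeline shows the same decoding errors, the problem is more likely in the sink chain or the transport than in the rest of the application.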

which results in this pipeline when running:

I’ve limited my pipeline to 5 fps. Outside, of that, the source and inference portions of the pipeline are pretty standard. My overall sink bin has a tee with a fakesink, optionally a Display Sink and then optionally the UDP sink. I am running my pipeline inside a docker container.

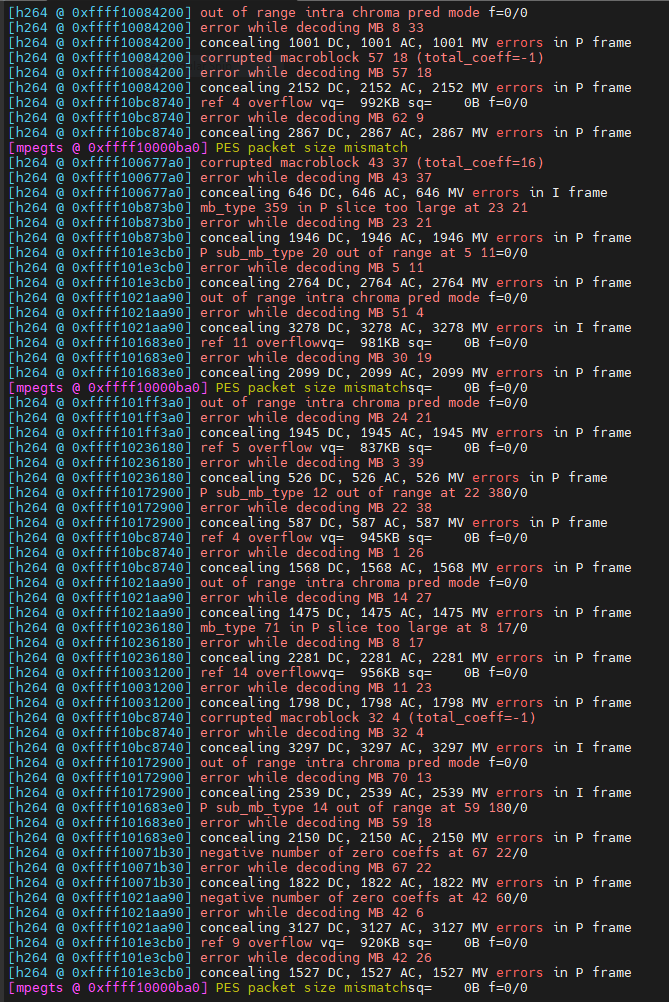

When trying to view the UDP stream on another system, there are many H.264 decoding errors and the output looks terrible.

Results are similar whether I stream from my laptop to my Jetson or from my Jetson to my laptop. If I stream from the Docker container on the laptop to the laptop host itself and view the stream there, the results are much better, but there are still a few errors and the output doesn’t look quite right.

The network between the laptop and the Jetson is very good, so it seems strange that packets would simply be dropped for no reason. Any pointers on which elements I might not have set up correctly would be appreciated.
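One way to check whether datagrams are actually being lost is to inspect the MPEG-TS continuity counters on the receiving side. Every 188-byte TS packet starts with the sync byte `0x47`, carries a 13-bit PID, and has a 4-bit continuity counter that increments per PID for each packet with a payload. The sketch below is a simplified checker under those rules. `TsContinuityChecker` and `TsCheckResult` are hypothetical names, receiving the datagrams from a socket is left out, and legal duplicate packets and the discontinuity indicator are not handled.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::size_t kTsPacketSize = 188;
constexpr std::uint8_t kTsSyncByte = 0x47;
constexpr std::uint16_t kNullPid = 0x1FFF;  // stuffing packets, counter is not meaningful

struct TsCheckResult {
    std::size_t packets = 0;          // TS packets examined
    std::size_t sync_errors = 0;      // packets that did not start with 0x47
    std::size_t continuity_gaps = 0;  // counters that did not follow the previous one
};

class TsContinuityChecker {
public:
    // Feed one received UDP payload, assumed to contain whole 188-byte TS packets.
    void feed(const std::uint8_t* data, std::size_t size) {
        for (std::size_t off = 0; off + kTsPacketSize <= size; off += kTsPacketSize) {
            const std::uint8_t* pkt = data + off;
            ++result_.packets;
            if (pkt[0] != kTsSyncByte) {
                ++result_.sync_errors;
                continue;
            }
            const std::uint16_t pid =
                static_cast<std::uint16_t>(((pkt[1] & 0x1F) << 8) | pkt[2]);
            const bool has_payload = (pkt[3] & 0x10) != 0;  // adaptation_field_control bit
            const std::uint8_t cc = pkt[3] & 0x0F;          // continuity_counter
            // The counter only advances on packets that carry a payload.
            if (pid == kNullPid || !has_payload) continue;
            if (seen_[pid] && cc != ((last_cc_[pid] + 1) & 0x0F)) {
                ++result_.continuity_gaps;
            }
            seen_[pid] = true;
            last_cc_[pid] = cc;
        }
    }

    const TsCheckResult& result() const { return result_; }

private:
    std::array<bool, 8192> seen_{};             // one slot per possible PID
    std::array<std::uint8_t, 8192> last_cc_{};  // last counter seen per PID
    TsCheckResult result_;
};
```

If `continuity_gaps` grows on the receiver, datagrams are probably being lost or reordered somewhere between `udpsink` and the viewer. If the counters stay continuous while the decoder still reports errors, the encoder, parser, or muxer settings would be the more likely place to look.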