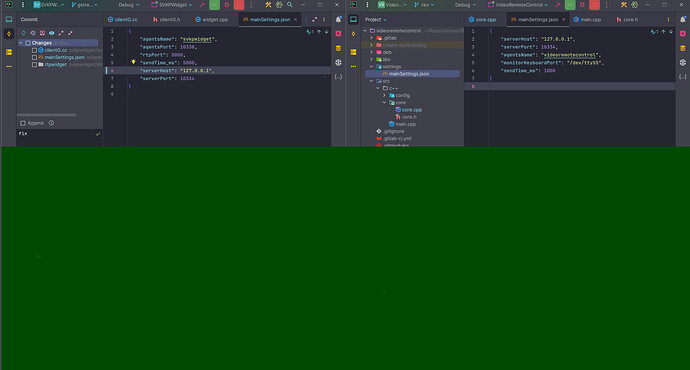

Hello. I have a problem I would like to solve. I stream the screen as RTP (H.264) traffic, and the first received frame is always displayed with a green stripe and at a different size each time. How can I fix this?

Sender code (the pipeline that ends in `udpsink`):

```cpp
// `pipeline`, `windowsStartX`, `windowsEndX` and the PROPERTY_x264enc_* constants
// are defined elsewhere in my code.
const auto port = JsonSettings::get()->value("mainSettings", "clientPort").toInt();
const auto ipClient = JsonSettings::get()->value("mainSettings", "clientHost").toString();

const auto source       = gst_element_factory_make("ximagesrc", "source");
const auto videoconvert = gst_element_factory_make("videoconvert", "videoconvert");
const auto x264enc      = gst_element_factory_make("x264enc", "x264enc");
const auto rtph264pay   = gst_element_factory_make("rtph264pay", "rtph264pay");
const auto sink         = gst_element_factory_make("udpsink", "sink");

/* Check the elements before configuring them */
if (!pipeline || !source || !sink || !videoconvert || !x264enc || !rtph264pay) {
    g_printerr("Not all elements could be created.\n");
    return;
}

/* Capture only the horizontal range covered by the window */
g_object_set(source, "startx", windowsStartX, "endx", windowsEndX, NULL);

/* Encoder settings */
g_object_set(x264enc,
             "tune", PROPERTY_x264enc_TUNE,
             "bitrate", PROPERTY_x264enc_BITRATE,
             "speed-preset", PROPERTY_x264enc_SUPER_SPEED,
             NULL);

/* Destination of the RTP stream (g_object_set copies the string) */
g_object_set(sink,
             "host", ipClient.toStdString().c_str(),
             "port", port,
             NULL);

/* Add all elements into the pipeline */
gst_bin_add_many(GST_BIN(pipeline), source, videoconvert, x264enc, rtph264pay, sink, NULL);

/* Link: ximagesrc ! videoconvert ! x264enc ! rtph264pay ! udpsink */
if (!gst_element_link_many(source, videoconvert, x264enc, rtph264pay, sink, NULL)) {
    g_printerr("Elements could not be linked.\n");
    gst_object_unref(pipeline);
    return;
}

/* Set the pipeline to the PLAYING state: a live source such as ximagesrc
   produces no data while the pipeline is only PAUSED */
const auto ret = gst_element_set_state(pipeline, GST_STATE_PLAYING);
if (ret == GST_STATE_CHANGE_FAILURE) {
    g_printerr("Unable to set the pipeline to the playing state.\n");
    gst_object_unref(pipeline);
    return;
}

/* Wait until error or EOS */
const auto bus = gst_element_get_bus(pipeline);
const auto msg = gst_bus_timed_pop_filtered(
    bus,
    GST_CLOCK_TIME_NONE,
    static_cast<GstMessageType>(GST_MESSAGE_ERROR | GST_MESSAGE_EOS));

/* Parse message */
if (msg != nullptr) {
    GError *err = nullptr;
    gchar *debug_info = nullptr;

    switch (GST_MESSAGE_TYPE(msg)) {
    case GST_MESSAGE_ERROR:
        gst_message_parse_error(msg, &err, &debug_info);
        g_printerr("Error received from element %s: %s\n",
                   GST_OBJECT_NAME(msg->src), err->message);
        g_printerr("Debugging information: %s\n",
                   debug_info ? debug_info : "none");
        g_clear_error(&err);
        g_free(debug_info);
        break;
    case GST_MESSAGE_EOS:
        g_print("End-Of-Stream reached.\n");
        break;
    default:
        /* We should not reach here because we only asked for ERRORs and EOS */
        g_printerr("Unexpected message received.\n");
        break;
    }
    gst_message_unref(msg);
}

/* Free resources */
gst_object_unref(bus);
gst_element_set_state(pipeline, GST_STATE_NULL);
gst_object_unref(pipeline);
```
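
A commonly reported cause of corrupted (often green) first frames in RTP/H.264 streams is that the receiver starts decoding before it has received the SPS/PPS headers and an IDR keyframe. A minimal sketch of one thing worth ruling out, assuming that is what happens here: a hypothetical helper that builds a `gst_parse_launch()` description equivalent to the sender above, with `config-interval=-1` on `rtph264pay` (resend SPS/PPS with every IDR frame) and `key-int-max` on `x264enc` (bound the distance between keyframes). In the element-based code the same settings would be `g_object_set(rtph264pay, "config-interval", -1, NULL)` and `g_object_set(x264enc, "key-int-max", ..., NULL)`. It also keeps the capture width even, since x264 is known to reject odd dimensions for 4:2:0 input and, per my reading of `ximagesrc`, the captured width is `endx - startx + 1`. The helper names, `tune=zerolatency`, `speed-preset=superfast` and the `keyIntMax` value are assumptions, not taken from the original code.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: keep the captured width even. Assumes ximagesrc
// captures endx - startx + 1 pixels; when that count is odd, endx is moved
// one pixel to the left.
int evenEndX(int startX, int endX) {
    // endx=0 means "to the screen edge" in ximagesrc, so leave such values alone.
    if (endX <= startX) {
        return endX;
    }
    const int width = endX - startX + 1;
    return (width % 2 == 0) ? endX : endX - 1;
}

// Hypothetical helper: a gst_parse_launch() description equivalent to the
// element-by-element sender, plus keyframe and SPS/PPS settings.
// tune=zerolatency, speed-preset=superfast and keyIntMax are assumptions.
std::string senderDescription(const std::string& host, int port,
                              int startX, int endX, int keyIntMax) {
    return "ximagesrc startx=" + std::to_string(startX) +
           " endx=" + std::to_string(evenEndX(startX, endX)) +
           " ! videoconvert"
           " ! x264enc tune=zerolatency speed-preset=superfast key-int-max=" +
           std::to_string(keyIntMax) +
           // config-interval=-1: send SPS/PPS with every IDR frame, so a
           // receiver that joins late can start decoding at the next keyframe.
           " ! rtph264pay config-interval=-1 pt=96"
           " ! udpsink host=" + host + " port=" + std::to_string(port);
}
```

For example, `senderDescription("127.0.0.1", 5000, 0, 1023, 30)` yields `ximagesrc startx=0 endx=1023 ! videoconvert ! x264enc tune=zerolatency speed-preset=superfast key-int-max=30 ! rtph264pay config-interval=-1 pt=96 ! udpsink host=127.0.0.1 port=5000`, which can be passed to `gst_parse_launch()` or tried first with `gst-launch-1.0`.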

Receiver code (the pipeline that starts with `udpsrc`):

```cpp
// `windId` (the native window handle) is defined elsewhere in my code.
// gst_video_overlay_set_window_handle() needs <gst/video/videooverlay.h>.
gst_init(nullptr, nullptr);

const auto port = JsonSettings::get()->value("mainSettings", "agentsPort").toInt();
const auto script = std::string(
    "udpsrc port=" + std::to_string(port) +
    " caps = \"application/x-rtp, media=(string)video, clock-rate=(int)90000, "
    "encoding-name=(string)H264, payload=(int)96\""
    " ! rtpjitterbuffer ! rtph264depay ! decodebin ! videoconvert ! ximagesink name=sink");
// Earlier variant: the same description with port 5000 hard-coded and without rtpjitterbuffer.

// gst_parse_launch() already returns a complete pipeline, so there is no need
// to wrap it in a second one created with gst_pipeline_new().
GError *parseError = nullptr;
const auto pipeline = gst_parse_launch(script.c_str(), &parseError);
if (parseError != nullptr) {
    g_printerr("Problem in pipeline description: %s\n", parseError->message);
    g_clear_error(&parseError);
}
if (pipeline == nullptr) {
    return;
}

// Render into the existing window; gst_bin_get_by_name() returns a new reference.
const auto ximagesink = gst_bin_get_by_name(GST_BIN(pipeline), "sink");
gst_video_overlay_set_window_handle(GST_VIDEO_OVERLAY(ximagesink), windId);
gst_object_unref(ximagesink);

const auto ret = gst_element_set_state(pipeline, GST_STATE_PLAYING);
if (ret == GST_STATE_CHANGE_FAILURE) {
    g_printerr("Unable to set the pipeline to the playing state.\n");
    gst_object_unref(pipeline);
    return;
}

/* Wait until error or EOS */
GstBus *bus = gst_element_get_bus(pipeline);
GstMessage *msg = gst_bus_timed_pop_filtered(
    bus,
    GST_CLOCK_TIME_NONE,
    static_cast<GstMessageType>(GST_MESSAGE_ERROR | GST_MESSAGE_EOS));

/* Parse message */
if (msg != nullptr) {
    GError *err = nullptr;
    gchar *debug_info = nullptr;

    switch (GST_MESSAGE_TYPE(msg)) {
    case GST_MESSAGE_ERROR:
        gst_message_parse_error(msg, &err, &debug_info);
        g_printerr("Error received from element %s: %s\n",
                   GST_OBJECT_NAME(msg->src), err->message);
        g_printerr("Debugging information: %s\n",
                   debug_info ? debug_info : "none");
        g_clear_error(&err);
        g_free(debug_info);
        break;
    case GST_MESSAGE_EOS:
        g_print("End-Of-Stream reached.\n");
        break;
    default:
        /* We should not reach here because we only asked for ERRORs and EOS */
        g_printerr("Unexpected message received.\n");
        break;
    }
    gst_message_unref(msg);
}

/* Free resources */
gst_object_unref(bus);
gst_element_set_state(pipeline, GST_STATE_NULL);
gst_object_unref(pipeline);
```
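
On the receiving side, the `payload` value in the `udpsrc` caps has to match the payload type the sender's `rtph264pay` uses (its `pt` property, 96 by default). A minimal sketch, assuming one wants to keep the two in sync from configuration: a hypothetical helper that builds the same receiver description as above with the port and payload type as parameters. The function name and its parameters are illustrative only.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: the receiver description from the question, with the
// UDP port and the RTP payload type as parameters. payloadType must equal the
// "pt" property of the sender's rtph264pay (96 by default).
std::string receiverDescription(int port, int payloadType) {
    return "udpsrc port=" + std::to_string(port) +
           " caps=\"application/x-rtp, media=(string)video, clock-rate=(int)90000,"
           " encoding-name=(string)H264, payload=(int)" +
           std::to_string(payloadType) + "\"" +
           " ! rtpjitterbuffer ! rtph264depay ! decodebin ! videoconvert"
           " ! ximagesink name=sink";
}
```

With `receiverDescription(5000, 96)` the result is the same description the receiver code builds for port 5000, so it can be passed to `gst_parse_launch()` unchanged.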