Hello Tim,

I have attached the code files for your reference. Could you please take a look and help me resolve the issue in my code?

#include <gst/gst.h>

#include <stdio.h>

#include <gst/app/gstappsink.h>

#include <gst/app/gstappsrc.h>

#include <gst/gstbufferlist.h>

static GstBufferList *buflist, *copy_buflist;

static GstPad *identity_src_pad;

GstFlowReturn retval;

GstElement *pipeline, *v4l2src, *identity,*videoconvert, *queue, *valve, *x264enc, *mp4mux, *filesink;

GstBus *bus;

GstMessage *msg;

gboolean terminate = FALSE;

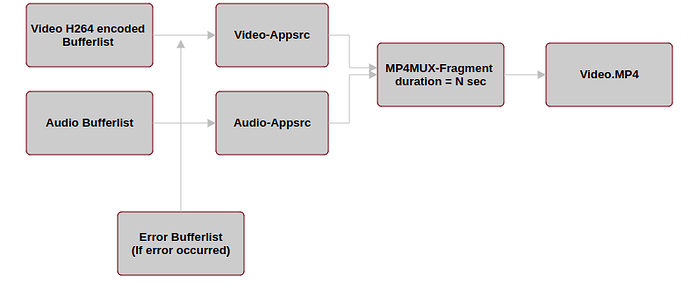

void convertToMP4(GstBufferList *buflist)

{

// Create pipeline for MP4 conversion.

GstElement *pipeline, *appsrc, *videoconvert, *videoencode, *muxer, *file_sink;

pipeline = gst_pipeline_new("MP4-pipeline");

appsrc = gst_element_factory_make("appsrc", "source");

muxer = gst_element_factory_make("mp4mux", "mp4-muxer");

file_sink = gst_element_factory_make("filesink", "filesink");

g_object_set(G_OBJECT(appsrc),

"stream-type", 0,

"format", GST_FORMAT_TIME, NULL);

g_object_set(G_OBJECT(muxer), "fragment-duration", 2000, NULL);

// Set the resolution and framerate caps

// GstCaps *caps = gst_caps_new_simple("video/x-raw",

// "width", G_TYPE_INT, 1280,

// "height", G_TYPE_INT, 720,

// "framerate", GST_TYPE_FRACTION, 10, 1,

// NULL);

// gst_app_src_set_caps (GST_APP_SRC(appsrc),caps);

// gst_caps_unref(caps);

g_object_set(file_sink, "location", "NEW_VIDEO.mp4", NULL);

gst_bin_add_many(GST_BIN(pipeline), appsrc,muxer, file_sink, NULL);

if (gst_element_link_many(appsrc,muxer, file_sink, NULL) != TRUE)

{

g_printerr("Elements could not be linked in the pipeline.\n");

gst_object_unref(pipeline);

exit(1);

}

copy_buflist = gst_buffer_list_copy_deep(buflist);

g_print("isbuffered is filled and Buffer size is %d\n", gst_buffer_list_length(copy_buflist));

gst_element_set_state(pipeline, GST_STATE_PLAYING);

retval = gst_app_src_push_buffer_list(GST_APP_SRC(appsrc), copy_buflist);

g_print("RETVAL %d\n", retval);

g_print("Sending EOS!!!!!!!");

g_signal_emit_by_name(appsrc, "end-of-stream", &retval);

}

static GstPadProbeReturn pad_probe_cb(GstPad *pad, GstPadProbeInfo *info, gpointer user_data)

{

printf("ERROR FRAMES PAD_PROBE");

static GstClockTime timestamp = 0;

GstMapInfo map;

GstBuffer *buff;

GstBuffer *new_buffer;

buff = gst_pad_probe_info_get_buffer(info);

gsize buffer_size = gst_buffer_get_size(buff);

double bufferSizeMB = static_cast<double>(buffer_size) / (1024 * 1024);

new_buffer = gst_buffer_copy_deep(buff);

// g_print(" timestamp : %ld\n", GST_BUFFER_PTS(new_buffer) / 1000000000);

if (bufferSizeMB > 0)

gst_buffer_list_add(buflist, new_buffer);

g_print("Buffer length is %d\n", gst_buffer_list_length(buflist));

if (gst_buffer_list_length(buflist) == 100)

{

convertToMP4(buflist);

gst_buffer_list_unref(buflist);

buflist = gst_buffer_list_new();

}

return GST_PAD_PROBE_OK;

}

void setValvevalue()

{

g_object_set(G_OBJECT(valve), "drop", false, NULL);

g_object_set(G_OBJECT(queue), "max-size-time", 0, "leaky", 0, NULL);

printf("drop value setted tp false\n");

}

int stopStream()

{

gst_element_set_state(pipeline, GST_STATE_NULL);

}

int start_recording_camera()

{

gst_init(NULL,NULL);

// Create GStreamer elements

pipeline = gst_pipeline_new("pipeline");

v4l2src = gst_element_factory_make("v4l2src", "v4l2-source");

videoconvert = gst_element_factory_make("videoconvert", "video-convert");

queue = gst_element_factory_make("queue", "queue");

valve = gst_element_factory_make("valve", "valve");

x264enc = gst_element_factory_make("x264enc", "x264-encoder");

identity = gst_element_factory_make("identity", "identity");

mp4mux = gst_element_factory_make("mp4mux", "mp4-muxer");

filesink = gst_element_factory_make("fakesink", "fake-sink");

if (!pipeline || !v4l2src || !videoconvert || !queue || !valve || !x264enc || !mp4mux || !filesink) {

g_printerr("One or more elements could not be created. Exiting.\n");

return -1;

}

// Set element properties

g_object_set(G_OBJECT(v4l2src), "device", "/dev/video0",NULL);

g_object_set(G_OBJECT(mp4mux), "fragment-duration", 2000, NULL);

g_object_set(G_OBJECT(valve), "drop", false, NULL);

g_object_set(G_OBJECT(x264enc), "tune", 4, NULL);

//g_object_set(G_OBJECT(filesink), "location", "NF_video.mp4", NULL);

// Build the pipeline

gst_bin_add_many(GST_BIN(pipeline), v4l2src, videoconvert, queue, valve,identity, x264enc, mp4mux, filesink, NULL);

gst_element_link_many(v4l2src, videoconvert,queue,valve, x264enc,identity, mp4mux, filesink, NULL);

identity_src_pad = gst_element_get_static_pad(identity, "src");

// // Create a buffer list and attach it to the pipeline user_data

buflist = gst_buffer_list_new();

// Add probe to the identity source pad

gst_pad_add_probe(identity_src_pad, GST_PAD_PROBE_TYPE_BUFFER, pad_probe_cb, pipeline, NULL);

// Set the pipeline to the "playing" state

gst_element_set_state(pipeline, GST_STATE_PLAYING);

}

void testAPI(int apiNumber) {

//std::thread startStreamThread;

switch (apiNumber) {

case 1:

printf("Testing startStream API\n");

// startStream(width,height);

start_recording_camera();

//startStreamThread = std::thread(startStream);

//startStreamThread.join(); // Wait for the thread to finish*/

break;

case 2:

printf("Testing setValvevalue API\n");

setValvevalue();

break;

case 3:

printf("Testing Stopstream API\n");

stopStream();

break;

default:

printf("Invalid API number\n");

break;

}

}

int main(int argc, char *argv[]) {

int apiNumber;

while (1) {

// Display options to the user

printf("Select an API to test:\n");

printf("1. startStream\n");

printf("2. SetValvevlaue\n");

printf("3. StopStream\n");

// printf("3. ERROR Buffer added\n");

printf("0. Exit\n");

printf("Enter API number: ");

// Read user input

scanf("%d", &apiNumber);

// Exit the loop if the user chooses 0

if (apiNumber == 0)

break;

// Call the selected API dynamically

testAPI(apiNumber);

// Clear the input buffer

while (getchar() != '\n')

continue;

}

printf("Exiting...\n");

return 0;

}

Errors

fault gstutils.c:3981:gst_pad_create_stream_id_internal:<source:src> Creating random stream-id, consider implementing a deterministic way of creating a stream-id

0:00:11.341708588 15745 0x7fbe04004f20 FIXME basesink gstbasesink.c:3145:gst_base_sink_default_event:<filesink> stream-start event without group-id. Consider implementing group-id handling in the upstream elements

0:00:11.342067623 15745 0x7fbe04004f20 WARN qtmux gstqtmux.c:4568:gst_qt_mux_add_buffer:<mp4-muxer> error: format wasn't negotiated before buffer flow on pad audio_0

0:00:11.342204438 15745 0x7fbe04004f20 WARN basesrc gstbasesrc.c:3055:gst_base_src_loop:<source> error: Internal data stream error.

0:00:11.342216224 15745 0x7fbe04004f20 WARN basesrc gstbasesrc.c:3055:gst_base_src_loop:<source> error: streaming stopped, reason not-negotiated (-4)

Compilation line

g++ Pre-event.cpp -o Pre-event pkg-config --cflags --libs gstreamer-1.0 pkg-config --libs gstreamer-app-1.0

Best regards,

Sulthan