Description:

Issue Summary:

I attempted to stream video using the GStreamer pipeline and HTTP server below, but ran into several problems. First, when I open http://localhost:8080/ in a browser, no video appears on the webpage. Second, when I open the same stream in VLC media player, it never starts playing. I also experimented with an HLS sink, but the minimum latency I could achieve was 4 seconds, which does not meet my requirements. I then tried souphttpclientsink instead (the setup below), but could not get it to work either.

Steps to Reproduce:

- Execute the provided GStreamer pipeline:

  ```shell
  gst-launch-1.0 v4l2src device=/dev/video0 \
    ! video/x-raw,width=640,height=480,framerate=30/1 \
    ! videoconvert ! queue \
    ! x264enc speed-preset=superfast tune=zerolatency \
    ! h264parse config-interval=-1 \
    ! mp4mux name=mux_av fragment-duration=10 \
    ! souphttpclientsink location=http://localhost:8080/
  ```

- Attempt to access the stream via http://localhost:8080/ in a web browser.

- Try to play the stream using VLC media player.

Expected Result:

The video stream should be visible on the webpage when accessed via http://localhost:8080/, and VLC media player should be able to play the stream without issues.

Actual Result:

The video stream is not displayed on the webpage, and VLC media player fails to play the stream.

Additional Information:

I have also tried hlssink (pipeline below) in the hope of getting low latency, but the minimum latency I achieved was 4 seconds, which is unsuitable for my use case. I am therefore looking for help resolving these issues so that the video streams to both a web browser and VLC media player.

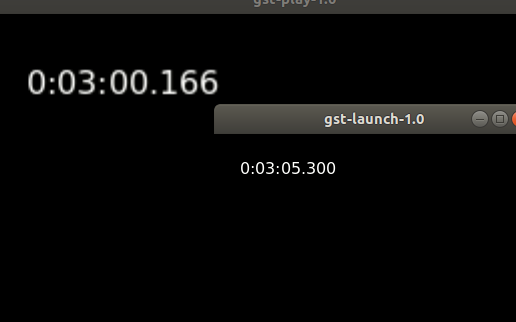

HLS pipeline:

```shell
gst-launch-1.0 v4l2src device="/dev/video0" ! videoconvert ! clockoverlay \
  ! x264enc tune=zerolatency ! mpegtsmux \
  ! hlssink playlist-root=http://192.168.xx.xx:8080 playlist-location=playlist_test.m3u8 \
      location=segment_%05d.ts target-duration=1 max-files=4
```

- Chrome: http://192.168.xx.xx:8080/playlist_test.m3u8
- VLC: http://192.168.xx.xx:8080/playlist_test.m3u8
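A rough latency budget for this hlssink setup is sketched below. It assumes the player follows the RFC 8216 recommendation not to start playback less than three target durations from the end of the playlist, that a segment is only listed once it is complete, and that hlssink cuts segments close to `target-duration=1`; encoding and network delay are ignored.

$$
L \approx \underbrace{T_{\text{seg}}}_{\text{current segment}} + \underbrace{3\,T_{\text{target}}}_{\text{player hold-back}} \approx 1\,\text{s} + 3 \times 1\,\text{s} = 4\,\text{s}
$$

Under these assumptions the estimate matches the observed 4 seconds, which suggests the floor comes mostly from how standard HLS players buffer rather than from the GStreamer pipeline itself.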

HTTP server code (Server.js):

```javascript
const http = require('http');

http.createServer(function (req, res) {
  // Set the content type to video/mp4
  res.writeHead(200, { 'Content-Type': 'video/mp4' });

  // When data is received in the request, write it to the response
  req.on('data', function (data) {
    console.log('STREAM_DATA');
    res.write(data);
  });

  // When the request ends, end the response
  req.on('end', function () {
    res.end();
  });
}).listen(8080);

console.log('Server running at http://localhost:8080/');
```
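In the server above, each request is handled in isolation: the body that souphttpclientsink uploads (it sends the stream with HTTP PUT) is written back into the response of that same upload request, while the browser's or VLC's `GET` has no body, so its response ends immediately without any video data. A minimal sketch of a relay that fans the upload out to viewers is shown below. It is an untested illustration under several assumptions: mp4mux with `fragment-duration` emits top-level `ftyp` and `moov` boxes followed by repeating `moof`/`mdat` boxes; any `PUT`/`POST` is treated as the GStreamer upload and `GET /` as a viewer; and the names `Fmp4Relay` and `createRelayServer` are hypothetical.

```javascript
const http = require('http');

// Hypothetical relay for a fragmented MP4 byte stream. It splits the stream into
// top-level MP4 boxes (4-byte big-endian size + 4-byte type) so that a viewer
// joining mid-stream can be started on a fragment ('moof') boundary.
class Fmp4Relay {
  constructor() {
    this.pending = Buffer.alloc(0); // bytes of a box that is not complete yet
    this.initSegment = [];          // cached 'ftyp' + 'moov' boxes
    this.viewers = new Set();       // viewers already receiving fragments
    this.waiting = new Set();       // viewers waiting for the next 'moof'
  }

  addViewer(viewer) {
    this.waiting.add(viewer);
  }

  removeViewer(viewer) {
    this.waiting.delete(viewer);
    this.viewers.delete(viewer);
  }

  // Append uploaded bytes and forward every box that is now complete.
  push(chunk) {
    this.pending = Buffer.concat([this.pending, chunk]);
    while (this.pending.length >= 8) {
      let size = this.pending.readUInt32BE(0);
      if (size === 1) {
        // 64-bit "largesize" variant: the real size follows the type field.
        if (this.pending.length < 16) return;
        size = Number(this.pending.readBigUInt64BE(8));
      }
      if (size < 8) {
        // size 0 ("box runs to end of file") or corrupt data: not handled here.
        console.error(`dropping stream data: unsupported box size ${size}`);
        this.pending = Buffer.alloc(0);
        return;
      }
      if (this.pending.length < size) return; // wait for the rest of the box
      const box = this.pending.subarray(0, size);
      this.pending = this.pending.subarray(size);
      this.handleBox(box.toString('latin1', 4, 8), box);
    }
  }

  handleBox(type, box) {
    if (type === 'ftyp') this.initSegment = []; // a new stream header starts
    if (type === 'ftyp' || type === 'moov') {
      this.initSegment.push(Buffer.from(box)); // copy so the cache owns its bytes
      return;
    }
    if (type === 'moof') {
      // Fragment boundary: send the cached header to waiting viewers, then go live.
      for (const viewer of this.waiting) {
        for (const initBox of this.initSegment) viewer.write(initBox);
        this.viewers.add(viewer);
      }
      this.waiting.clear();
    }
    for (const viewer of this.viewers) viewer.write(box);
  }
}

// Assumption: any PUT/POST is the GStreamer upload; GET / is a viewer.
function createRelayServer(relay = new Fmp4Relay()) {
  return http.createServer((req, res) => {
    if (req.method === 'PUT' || req.method === 'POST') {
      // Feed the upload into the shared relay instead of echoing it back.
      req.on('data', (chunk) => relay.push(chunk));
      req.on('end', () => {
        res.writeHead(200);
        res.end();
      });
      return;
    }
    if (req.method === 'GET' && req.url === '/') {
      // Viewer (browser or VLC): keep the response open and stream into it.
      res.writeHead(200, { 'Content-Type': 'video/mp4', 'Cache-Control': 'no-cache' });
      relay.addViewer(res);
      res.on('close', () => relay.removeViewer(res));
      return;
    }
    res.writeHead(404);
    res.end();
  });
}

const server = createRelayServer();
server.on('error', (err) => console.error('relay server error:', err.message));
server.listen(8080, () => console.log('Relay running at http://localhost:8080/'));
```

Parsing box boundaries, rather than forwarding raw chunks, is what lets a client that connects after the pipeline has started receive the `ftyp`/`moov` header first and then begin at a complete fragment; without that header a fragmented MP4 cannot be decoded. Whether a particular browser plays a progressive fragmented MP4 served this way has not been verified here.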