Is there any example code (or guidance) for how to set up alpha in a dynamic pipeline with VP9-encoded .webm files that have alpha? I have read the doc page for GstVideoCodecAlphaMeta but haven’t successfully used it with pad probes. I can set up a static pipeline using compositor and the alpha is transparent where expected, but when adding a video layer dynamically I can only get the “alpha” setting to make the whole overlay more or less transparent, not just the specific areas that are transparent in the overlay file. I am working in C but any code examples would be helpful.

/*this is the code for the dynamic pipeline that does not handle the alpha properly*/

#include <gst/gst.h>

#include <gst/video/video.h>

#include <glib.h>

#include <stdio.h>

static GstElement *pipeline, *base_src, *base_decodebin, *overlay_src, *overlay_decodebin;

static GstElement *base_convert, *overlay_convert, *compositor, *sink, *audio_convert, *audio_resample, *audio_sink;

static gboolean overlay_active = FALSE;

static GMainLoop *loop;

// Function prototypes

static void on_pad_added(GstElement *element, GstPad *new_pad, gpointer data);

static void on_error(GstBus *bus, GstMessage *msg, gpointer data);

static GstPadProbeReturn alpha_buffer_probe_cb(GstPad *pad, GstPadProbeInfo *info, gpointer user_data);

static gboolean handle_keyboard(GIOChannel *source, GIOCondition cond, gpointer data);

static GstPadProbeReturn alpha_buffer_probe_cb(GstPad *pad, GstPadProbeInfo *info, gpointer user_data) {

if (GST_PAD_PROBE_INFO_TYPE(info) & GST_PAD_PROBE_TYPE_BUFFER) {

GstBuffer *buffer = GST_PAD_PROBE_INFO_BUFFER(info);

GstVideoCodecAlphaMeta *alpha_meta = gst_buffer_get_video_codec_alpha_meta(buffer);

if (!alpha_meta) {

g_print("No alpha meta found in buffer\n");

}

}

return GST_PAD_PROBE_PASS;

}

static gboolean handle_keyboard(GIOChannel *source, GIOCondition cond, gpointer data) {

gchar *str = NULL;

gsize len = 0;

if (g_io_channel_read_line(source, &str, &len, NULL, NULL) == G_IO_STATUS_NORMAL) {

g_strchomp(str);

if (g_strcmp0(str, "s") == 0 && !overlay_active) {

overlay_active = TRUE;

// Dynamically create and add overlay elements

overlay_src = gst_element_factory_make("filesrc", "overlay_source");

overlay_decodebin = gst_element_factory_make("decodebin", "overlay_decodebin");

overlay_convert = gst_element_factory_make("videoconvert", "overlay_convert");

// Set the file location for the overlay video

g_object_set(overlay_src, "location", "/home/ken/Desktop/Spiral/SpiralData/spots.webm", NULL);

gst_bin_add_many(GST_BIN(pipeline), overlay_src, overlay_decodebin, overlay_convert, NULL);

gst_element_link(overlay_src, overlay_decodebin);

g_signal_connect(overlay_decodebin, "pad-added", G_CALLBACK(on_pad_added), overlay_convert);

// Manage overlay pad and link it

GstPad *src_pad = gst_element_get_static_pad(overlay_convert, "src");

GstPad *overlay_sink_pad = gst_element_request_pad_simple(compositor, "sink_%u");

gst_pad_link(src_pad, overlay_sink_pad);

g_object_set(overlay_sink_pad, "alpha", 0.2, NULL); // Adjust alpha transparency

gst_pad_add_probe(overlay_sink_pad, GST_PAD_PROBE_TYPE_BUFFER, alpha_buffer_probe_cb, NULL, NULL);

gst_element_sync_state_with_parent(overlay_src);

gst_element_sync_state_with_parent(overlay_decodebin);

gst_element_sync_state_with_parent(overlay_convert);

}

g_free(str);

}

return TRUE;

}

static void on_pad_added(GstElement *src, GstPad *new_pad, gpointer data) {

GstPad *sink_pad = gst_element_get_static_pad((GstElement *)data, "sink");

GstCaps *new_pad_caps = gst_pad_get_current_caps(new_pad);

const GstStructure *new_pad_struct = gst_caps_get_structure(new_pad_caps, 0);

const gchar *new_pad_type = gst_structure_get_name(new_pad_struct);

if (g_str_has_prefix(new_pad_type, "audio/x-raw")) {

g_print("Linking decoder to audio converter\n");

GstPad *audio_sink_pad = gst_element_get_static_pad(audio_convert, "sink");

gst_pad_link(new_pad, audio_sink_pad);

// Release the reference returned by gst_element_get_static_pad()

gst_object_unref(audio_sink_pad);

gst_element_sync_state_with_parent(audio_convert);

} else if (g_str_has_prefix(new_pad_type, "video/x-raw")) {

if (!gst_pad_is_linked(sink_pad)) {

if (gst_pad_link(new_pad, sink_pad) != GST_PAD_LINK_OK) {

g_error("Failed to link video pads!");

}

}

}

// new_pad_type points into the caps, so unref the caps only after the last use

gst_caps_unref(new_pad_caps);

gst_object_unref(sink_pad);

}

static void on_error(GstBus *bus, GstMessage *msg, gpointer data) {

GError *error = NULL;

gchar *debug_info = NULL;

if (GST_MESSAGE_TYPE(msg) == GST_MESSAGE_ERROR) {

gst_message_parse_error(msg, &error, &debug_info);

g_printerr("Error received from element %s: %s\n", GST_OBJECT_NAME(msg->src), error->message);

g_error_free(error);

g_printerr("Debugging information: %s\n", debug_info ? debug_info : "none");

g_free(debug_info);

gst_element_set_state(pipeline, GST_STATE_NULL);

g_main_loop_quit(loop);

}

}

int main(int argc, char *argv[]) {

gst_init(&argc, &argv);

loop = g_main_loop_new(NULL, FALSE);

// Construct base pipeline components

pipeline = gst_pipeline_new("video-mixer-pipeline");

base_src = gst_element_factory_make("filesrc", "base_source");

g_object_set(base_src, "location", "/home/ken/Desktop/Spiral/SpiralData/Spiral.avi", NULL);

base_decodebin = gst_element_factory_make("decodebin", "base_decodebin");

base_convert = gst_element_factory_make("videoconvert", "base_convert");

compositor = gst_element_factory_make("compositor", "compositor");

sink = gst_element_factory_make("autovideosink", "video_output");

audio_convert = gst_element_factory_make("audioconvert", "audio_convert");

audio_resample = gst_element_factory_make("audioresample", "audio_resample");

audio_sink = gst_element_factory_make("autoaudiosink", "audio_sink");

gst_bin_add_many(GST_BIN(pipeline), base_src, base_decodebin, base_convert, compositor, sink, audio_convert, audio_resample, audio_sink, NULL);

gst_element_link_many(base_src, base_decodebin, NULL);

g_signal_connect(base_decodebin, "pad-added", G_CALLBACK(on_pad_added), base_convert);

gst_element_link_many(base_convert, compositor, sink, NULL);

gst_element_link_many(audio_convert, audio_resample, audio_sink, NULL);

GIOChannel *io_stdin = g_io_channel_unix_new(fileno(stdin));

g_io_add_watch(io_stdin, G_IO_IN, (GIOFunc)handle_keyboard, NULL);

GstBus *bus = gst_element_get_bus(pipeline);

gst_bus_add_watch(bus, (GstBusFunc)on_error, loop);

gst_object_unref(bus);

gst_element_set_state(pipeline, GST_STATE_PLAYING);

g_main_loop_run(loop);

gst_element_set_state(pipeline, GST_STATE_NULL);

gst_object_unref(GST_OBJECT(pipeline));

g_main_loop_unref(loop);

return 0;

}

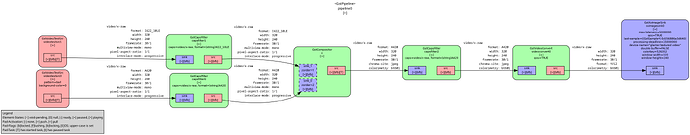

OK, so you are using compositor and videoconvert to combine two videos. This is expected to work, of course. Can you add code to dump the dot graph of your pipeline? This will help debug the negotiated caps.

One thing I suspect may be happening is that one of the color conversions ends up stripping alpha before composition.
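For reference, one way to produce the requested graph: GStreamer writes `.dot` files when the `GST_DEBUG_DUMP_DOT_DIR` environment variable is set before initialization. The sketch below is in Python, like the script later in this thread; in C the corresponding call is the `GST_DEBUG_BIN_TO_DOT_FILE()` macro. The helper names and the directory are hypothetical, chosen only for illustration.

```python
import os


def enable_dot_dumps(directory):
    """Tell GStreamer where to write .dot files; call this before Gst.init()."""
    os.makedirs(directory, exist_ok=True)
    os.environ["GST_DEBUG_DUMP_DOT_DIR"] = directory
    return directory


def dump_graph(pipeline, name):
    """Write <name>.dot for `pipeline`, including the caps negotiated on each link."""
    # Imported here so the helper above stays usable without PyGObject installed.
    import gi
    gi.require_version("Gst", "1.0")
    from gi.repository import Gst

    Gst.debug_bin_to_dot_file(pipeline, Gst.DebugGraphDetails.ALL, name)
```

A typical use would be to call `enable_dot_dumps()` at startup, call `dump_graph(pipeline, "overlay-linked")` once the overlay branch is running, and render the resulting file with Graphviz (`dot -Tpng`).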

OK, some odd stuff is happening, but this part is not a big deal: videoconvert transforms A420 to AV12. It’s the same format, just a different layout, yet I would have expected the compositor to work with A420.

The real issue is the output format of the compositor. The compositor is a bit simple: it transforms all inputs, in this case I420_10LE and AV12, to its output format, I420. As a side effect, the alpha channel is lost. I’d try to force the output of the compositor to your preferred format with alpha; worst case, you convert back to something opaque before display.
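To make the reasoning concrete, here is a small sketch that walks the formats read off the graph and reports where alpha disappears. The set of alpha-capable formats is a non-exhaustive assumption, and the function name is hypothetical.

```python
# Non-exhaustive set of GStreamer raw video formats that carry an alpha channel
# (an assumption for illustration; check the format list of your GStreamer version).
ALPHA_FORMATS = {"A420", "AV12", "AYUV", "RGBA", "BGRA", "ARGB", "ABGR"}


def first_alpha_loss(formats):
    """Return the index of the first alpha-less format that follows one with alpha, or None."""
    seen_alpha = False
    for index, fmt in enumerate(formats):
        if fmt in ALPHA_FORMATS:
            seen_alpha = True
        elif seen_alpha:
            return index
    return None


# Overlay branch as described above: decoder output, videoconvert output, compositor output.
overlay_branch = ["A420", "AV12", "I420"]
print(first_alpha_loss(overlay_branch))  # prints 2: alpha is lost at the compositor output
```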

Here’s a test pipeline you can use as reference:

gst-launch-1.0 \

videotestsrc pattern=18 background-color=0 ! video/x-raw,format=A420 ! c.sink_0 \

videotestsrc ! video/x-raw,format=I422_10LE ! c.sink_1 \

compositor name=c sink_0::zorder=2 sink_1::zorder=1 \

video/x-raw,format=A420 ! videoconvert ! xvimagesink
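Note that, as posted, the `compositor` line has no `!` before the caps that follow it. gst-launch needs a `!` between an element and a caps filter, and without it the caps string is likely to be parsed as an element name. Below is a sketch of the same pipeline as a `Gst.parse_launch` description with that link added. The function name is hypothetical, and `autovideosink` is an assumption, swapped in for `xvimagesink` for portability.

```python
def alpha_test_description(out_format="A420", sink="autovideosink"):
    """Build a parse_launch description that forces the compositor output format."""
    parts = [
        # Test pattern over a fully transparent background, sent in an alpha-capable format.
        "videotestsrc pattern=18 background-color=0 ! video/x-raw,format=A420 ! c.sink_0",
        # Opaque second input in a different format.
        "videotestsrc ! video/x-raw,format=I422_10LE ! c.sink_1",
        # The '!' after the pad properties links the compositor to the caps filter.
        f"compositor name=c sink_0::zorder=2 sink_1::zorder=1 ! video/x-raw,format={out_format}"
        f" ! videoconvert ! {sink}",
    ]
    return " ".join(parts)


if __name__ == "__main__":
    print(alpha_test_description())
```

The printed string can be passed to `Gst.parse_launch()` or pasted after `gst-launch-1.0` on a single line.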

Thanks! I’m working on it, will get back with results …

I haven’t gotten it working yet -

A couple of things -

- I’ve added this to main():

compositor = gst_element_factory_make("compositor", "compositor");

set_compositor_output_format(compositor); // Set the output format to A420

sink = gst_element_factory_make("autovideosink", "video_output");

and also tried adding this to ‘handle_keyboard’ (seems right?):

// Manage overlay pad and link it

GstPad *src_pad = gst_element_get_static_pad(overlay_convert, "src");

GstPad *overlay_sink_pad = gst_element_request_pad_simple(compositor, "sink_%u");

set_compositor_output_format(compositor);

gst_pad_link(src_pad, overlay_sink_pad);

and this function:

static void set_compositor_output_format(GstElement *compositor) {

GstCaps *caps = gst_caps_new_simple("video/x-raw",

"format", G_TYPE_STRING, "I422_10LE",

NULL);

GstPad *pad = gst_element_get_static_pad(compositor, "src");

gst_pad_set_caps(pad, caps);

gst_caps_unref(caps);

gst_object_unref(pad);

}

I’ve also tried it with ‘AV12’ and ‘VP9’ but no improvement.

- In the pipeline graph I see that after going through ‘GstCodecAlphaDemux’ in the ‘GstVp9AlphaDecodeBin’, ‘codec-alpha’ is ‘false’. Is that relevant?

- When I try to run your test pipeline I get the error: “WARNING: erroneous pipeline: no element "video"” and I don’t know why.

- Re: your suggestion to convert back to something with alpha, please explain. I’m not clear on where in the process to do that or how. In other words, how can I convert the overlay video to something with alpha after it’s been through the compositor?

thanks

You can’t really set caps on pads manually; use a capsfilter element instead (with its caps property).
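A minimal sketch of that approach, assuming the two elements are already in the pipeline and not yet linked to each other. The helper names are hypothetical. In C the same property would be set with `g_object_set(capsfilter, "caps", caps, NULL)`.

```python
def raw_video_caps(fmt, width=None, height=None):
    """Build a caps string such as 'video/x-raw,format=A420'."""
    fields = [f"format={fmt}"]
    if width is not None and height is not None:
        fields += [f"width={width}", f"height={height}"]
    return "video/x-raw," + ",".join(fields)


def link_with_capsfilter(pipeline, upstream, downstream, caps_string):
    """Link upstream -> capsfilter -> downstream, constraining the format in between."""
    # Imported here so raw_video_caps() stays usable without PyGObject installed.
    import gi
    gi.require_version("Gst", "1.0")
    from gi.repository import Gst

    capsfilter = Gst.ElementFactory.make("capsfilter", None)
    capsfilter.set_property("caps", Gst.Caps.from_string(caps_string))
    pipeline.add(capsfilter)
    # Element.link() returns False when the link cannot be made.
    if not (upstream.link(capsfilter) and capsfilter.link(downstream)):
        raise RuntimeError("could not link through capsfilter")
    return capsfilter
```

For the case discussed here, the call might look like `link_with_capsfilter(pipeline, compositor, out_convert, raw_video_caps("A420"))`, where `out_convert` stands for a `videoconvert` placed before the sink so the display can still receive an opaque format.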

Thanks, I will try to work with that.

I was able to solve the problem with the advice and sample code generously given to me by Honey_Patouceul.

The code doesn’t address the GstVideoCodecAlphaMeta question because it’s not necessary to set that up manually if one sets up the pipelines as Honey has demonstrated.

#!/usr/bin/env python

import time

import gi

gi.require_version('Gst', '1.0')

from gi.repository import Gst

Gst.init(None)

# Background video source pipeline

p1 = Gst.parse_launch ('filesrc location=background.avi ! decodebin ! videoconvert ! video/x-raw,format=RGBA,width=1920,height=1080,framerate=24/1 ! intervideosink channel=background')

if not p1:

    print('Failed to launch p1')

    exit(-1)

# Video overlay pipeline

p2 = Gst.parse_launch ('filesrc location=movie-webm.webm ! matroskademux ! queue ! parsebin ! vp9alphadecodebin ! video/x-raw,format=A420 ! videoconvert ! video/x-raw,format=RGBA ! videoscale ! videorate ! video/x-raw,format=RGBA,width=1920,height=1080,framerate=24/1 ! intervideosink channel=overlay')

if not p2:

    print('Failed to launch p2')

    exit(-1)

# Composing pipeline

p3 = Gst.parse_launch ('compositor name=comp sink_0::zorder=1 sink_1::width=1920 sink_1::height=1080 sink_1::zorder=2 ! video/x-raw,format=RGBA,width=1920,height=1080,framerate=24/1 ! videoconvert ! autovideosink intervideosrc channel=background ! comp.sink_0')

if not p3:

    print('Failed to launch p3')

    exit(-1)

comp = p3.get_by_name("comp")

print('Starting without overlay')

p1.set_state(Gst.State.PLAYING)

p3.set_state(Gst.State.PLAYING)

# Run for 3s

time.sleep(3)

print('Add overlay')

p3.set_state(Gst.State.PAUSED)

newSinkPad = comp.request_pad_simple("sink_1")

overlay = Gst.ElementFactory.make("intervideosrc")

overlay.set_property("channel", "overlay")

p3.add(overlay)

overlay.sync_state_with_parent()

newSrcPad = overlay.get_static_pad("src")

t = newSrcPad.link(newSinkPad);

p2.set_state(Gst.State.PLAYING)

p3.set_state(Gst.State.PLAYING)

# Run for 5s

time.sleep(5)

print('Remove overlay')

p2.set_state(Gst.State.PAUSED)

p3.set_state(Gst.State.PAUSED)

newSrcPad.unlink(newSinkPad)

comp.remove_pad(newSinkPad);

p3.remove(overlay)

p3.set_state(Gst.State.PLAYING)

# Wait for 3s

time.sleep(3)

# Add overlay

print('Add overlay')

p3.set_state(Gst.State.PAUSED)

newSinkPad = comp.request_pad_simple("sink_1")

overlay = Gst.ElementFactory.make("intervideosrc")

overlay.set_property("channel", "overlay")

p3.add(overlay)

overlay.sync_state_with_parent()

newSrcPad = overlay.get_static_pad("src")

t = newSrcPad.link(newSinkPad);

p2.set_state(Gst.State.PLAYING)

p3.set_state(Gst.State.PLAYING)

# Run for 5s

time.sleep(5)

print('Done. Bye')

“the background video is first played alone, after 3s it adds the overlay, plays for 5s, removes the overlay, runs for 3s, adds again the overlay and plays for 5s.”

Thank you, Honey_Patouceul!