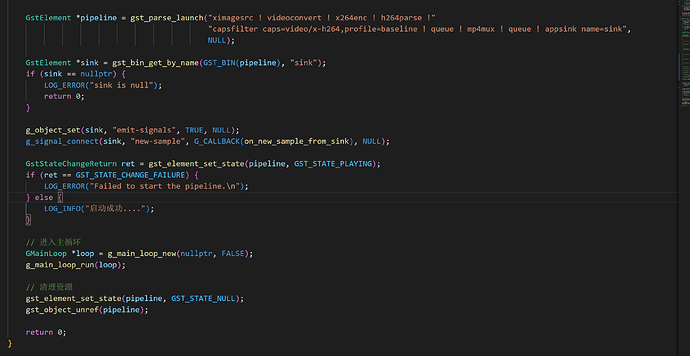

Here is my code:

I capture the desktop using ximagesrc and retrieve an MP4 video stream through callbacks. When I include mp4mux in the command line, the callback is triggered only once and doesn’t execute again. However, when I remove mp4mux, the callback works as expected. Saving the file in MP4 format via the command line also works fine.

Thank you for your assistance.

Best regards,

yu.liu

gst-launch-1.0 -e videotestsrc ! videoconvert ! x264enc ! h264parse ! queue ! mp4mux ! fakesink dump=1

I tested with the above pipeline and observed that mp4mux pushes a buffer once, and then pushes again only when I send EOS by pressing CTRL+C.

So all your buffers are kept in mp4mux until you send EOS. Maybe you can try different mp4mux properties to make it work as you want. I tested “force-chunks=true” and “fragment-duration=5000” and saw that the behaviour changes.

If you are trying to stream mp4 chunks, splitmuxsink with “sink-factory=appsink” might be useful. You might want to look at hlssink too.

Do not forget to end your pipelines with EOS as otherwise mp4 files will not be playable.
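To see what mp4mux actually pushes with different property settings, it can help to list the top-level MP4 boxes in each buffer that reaches your callback. Below is a minimal C++17 sketch, not taken from this thread: `BoxHeader`, `parseBoxHeader` and `listTopLevelBoxes` are hypothetical helper names, and it assumes each buffer starts on a box boundary, which I have not verified for mp4mux. It relies only on the ISO BMFF layout (a 32-bit big-endian size followed by a four-character type, where size 1 means a 64-bit size follows).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string>
#include <vector>

// Header of one ISO BMFF (MP4) box. All names here are illustrative only.
struct BoxHeader {
    std::uint64_t size;      // total box size including header; 0 = "to end of data"
    std::string type;        // four-character code, e.g. "ftyp", "moov", "moof", "mdat"
    std::size_t headerSize;  // 8, or 16 when a 64-bit size is present
};

// Read a 32-bit big-endian integer from four bytes.
static std::uint32_t readBE32(const std::uint8_t* p) {
    assert(p != nullptr);
    return (std::uint32_t(p[0]) << 24) | (std::uint32_t(p[1]) << 16) |
           (std::uint32_t(p[2]) << 8) | std::uint32_t(p[3]);
}

// Parse the box header at `offset`; returns nullopt when too few bytes remain.
std::optional<BoxHeader> parseBoxHeader(const std::vector<std::uint8_t>& data,
                                        std::size_t offset) {
    if (offset > data.size() || data.size() - offset < 8) return std::nullopt;
    const std::uint8_t* p = data.data() + offset;
    BoxHeader h;
    h.size = readBE32(p);
    h.type.assign(reinterpret_cast<const char*>(p + 4), 4);
    h.headerSize = 8;
    if (h.size == 1) {  // a 64-bit "largesize" follows the type field
        if (data.size() - offset < 16) return std::nullopt;
        h.size = (std::uint64_t(readBE32(p + 8)) << 32) | readBE32(p + 12);
        h.headerSize = 16;
    }
    return h;
}

// Collect the types of the top-level boxes found in one buffer.
// Assumption: the buffer begins exactly at a box boundary.
std::vector<std::string> listTopLevelBoxes(const std::vector<std::uint8_t>& data) {
    std::vector<std::string> types;
    std::size_t offset = 0;
    while (auto h = parseBoxHeader(data, offset)) {
        types.push_back(h->type);
        if (h->size < h->headerSize) break;         // size 0 or invalid: stop walking
        if (h->size > data.size() - offset) break;  // box continues past this buffer
        offset += static_cast<std::size_t>(h->size);
    }
    return types;
}
```

For a fragmented stream one would typically expect an initial `ftyp`/`moov` followed by repeating `moof`/`mdat` pairs. Passing the bytes of each mapped buffer to `listTopLevelBoxes` and logging the result shows whether that is what arrives in the callback.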

Thank you for your response. I adjusted the parameters of mp4mux, and now the callbacks trigger correctly.

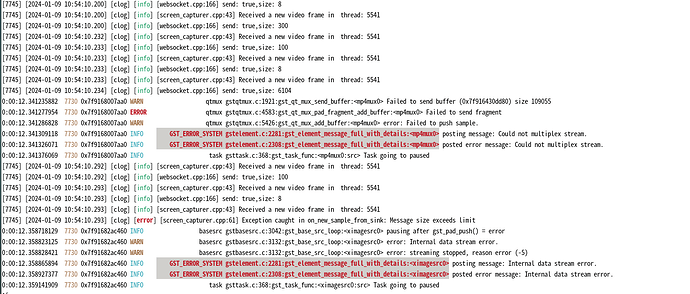

My goal is to send real-time video streams in mp4 format using WebSocket, but there is a significant delay, around 5 seconds. Is there a way to achieve real-time and low-latency streaming? Additionally, I encounter errors in GStreamer after running it for a while.

The error message is as follows:

Here is my command:

std::string pipelineDesc ="ximagesrc ! videoconvert ! x264enc ! h264parse ! mp4mux force-chunks=true faststart=true fragment-duration=10 streamable=true ! appsink name=sink ";

Unfortunately, I could not find the reason why you get that error. Maybe your buffers are too small. I would debug gstqtmux.c to check this, or a higher debug level for qtmux (GST_DEBUG=qtmux:7) might be more useful.

About latency, first set appsink’s sync property to false. With sync=true, appsink waits on the pipeline clock until each buffer's timestamp is reached before handing it over, which adds delay.

Then you can try this to plot the latency and see which element adds how much latency. Note that GStreamer tracers are not something I know well, and I am not affiliated with the repository I linked.

I tested what you are trying to do with the following two pipelines and it was fast enough in my case:

gst-launch-1.0 -e ximagesrc ! cudaupload ! cudaconvertscale ! cudadownload ! nvh264enc ! h264parse ! queue ! mp4mux force-chunks=true faststart=true fragment-duration=10 streamable=true ! tcpclientsink port=25566 sync=false

gst-launch-1.0 tcpserversrc port=25566 ! qtdemux ! h264parse ! nvh264dec ! glimagesink sync=false

WebSockets use TCP so I think using TCP for a quick comparison makes sense. I used GPU elements for scaling, encoding, decoding and displaying. Latency was about 1 second for streaming my two screens at the same time. Is that good enough for you?

Thank you, I have resolved the error, which was caused by WebSocket. I made adjustments to the pipeline:

std::string pipelineDesc = "ximagesrc ! videoconvert ! x264enc tune=zerolatency bitrate=6000 speed-preset=superfast "

"key-int-max=60 bframes=0 ! h264parse ! mp4mux force-chunks=true faststart=true "

"fragment-duration=10 streamable=true ! appsink name=sink sync=false";

capture_ptr->startCapture(pipelineDesc);

The latency still remains around 3-5 seconds, though. Since my environment cannot use a GPU, is this the limit of MP4 + WebSocket? I’m unsure how to optimize it to achieve performance similar to WebRTC.

Maybe MP4 is better for saving files, not really great for sending stuff over the net.

My test program is using 1.8 GB of memory, while WebRTC only takes up 170 MB.

this might have gone wrong from the beginning…

In my setup, the absence of a GPU would raise costs. Thank you for your assistance, and best wishes to you.

GST_DEBUG_COLOR_MODE=off GST_TRACERS="latency(flags=pipeline+element)" GST_DEBUG=GST_TRACER:7 GST_DEBUG_FILE=traces-stream.log gst-launch-1.0 -e ximagesrc ! videoconvert ! videoscale ! video/x-raw ! queue max-size-buffers=1 ! x264enc tune=zerolatency bitrate=6000 speed-preset=superfast key-int-max=60 bframes=0 ! queue max-size-buffers=1 ! h264parse ! video/x-h264,stream-format=avc ! mp4mux force-chunks=true faststart=true fragment-duration=10 ! queue2 max-size-buffers=1 max-size-time=1000000000 ! tcpclientsink max-lateness=100000000 port=25566 sync=false

GST_DEBUG_COLOR_MODE=off GST_TRACERS="latency(flags=pipeline+element)" GST_DEBUG=GST_TRACER:7 GST_DEBUG_FILE=traces.log gst-launch-1.0 tcpserversrc port=25566 ! qtdemux ! h264parse ! queue2 max-size-buffers=1 max-size-time=1000000000 ! avdec_h264 ! videoconvert ! glimagesink sync=false

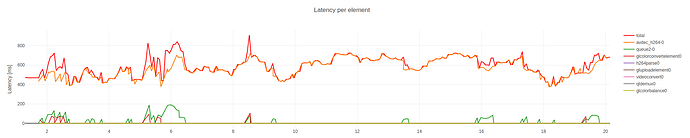

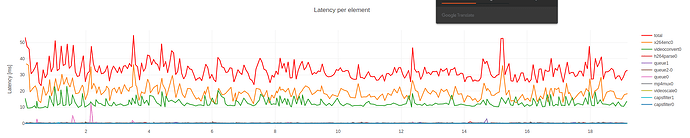

Receiver:

Streamer:

The plots were generated by the aforementioned library.

The observed latency is about 1 second. The only GPU element in my pipeline was glimagesink. I suppose you are going to display in a browser, so this should not matter.

I think you should be able to reduce the latency further, as my testing resolution is “3840x1161”, which is pretty high.

Thank you, and best wishes to you too.

Thanks for running those tests and sharing the data. I’ll conduct a latency test using your pipelines.
