I am trying to use the webrtcsink element on a Jetson Nano to display a live camera feed in Google Chrome on a client computer. However, I can’t get the webrtcsink stream to show up as a “remote-stream” in the demo gstwebrtc-api web application.

I’m using GStreamer version 1.26.0, built from source on the Jetson Nano, and my pipeline is:

GST_DEBUG=webrtc*:9 WEBRTCSINK_SIGNALLING_SERVER_LOG=debug gst-launch-1.0 videotestsrc is-live=true ! nvvidconv ! nvv4l2h264enc ! h264parse ! webrtcsink name=ws meta="meta,name=gst-stream" run-signalling-server=true video-caps=video/x-h264

I cloned the gstwebrtc-api repository and ran npm start on the client machine. I also changed the index.html file to point to the other machine’s signalling server (i.e. signalingServerUrl: "ws://192.168.xx.xx:8443").
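For reference, the change amounts to something like the sketch below. The helper name `buildGstWebRTCConfig` and the host `jetson.local` are hypothetical placeholders (my real value is the Jetson's LAN address), and the `meta.name` pattern is only an assumption based on the client name that appears in the server log.

```javascript
// Hypothetical helper: builds the options object that the demo page
// passes to the GstWebRTCAPI constructor.
function buildGstWebRTCConfig(host, port = 8443, secure = false) {
  // Use wss:// only when the page itself is served over HTTPS.
  const scheme = secure ? "wss" : "ws";
  return {
    // Assumed naming scheme, mirroring the "WebClient-<timestamp>" name in the log.
    meta: { name: `WebClient-${Date.now()}` },
    signalingServerUrl: `${scheme}://${host}:${port}`,
  };
}

// "jetson.local" is a placeholder host, not the address I actually used.
const config = buildGstWebRTCConfig("jetson.local");
console.log(config.signalingServerUrl);

// In the demo page the object is then handed to the API:
// const api = new GstWebRTCAPI(config);
```

The only field I touched is `signalingServerUrl`; everything else in the demo page is unchanged.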

On the Jetson device, I get the following:

Setting pipeline to PAUSED ...

Opening in BLOCKING MODE

2023-03-02T20:16:14.883879Z DEBUG ThreadId(01) spawn: gst_plugin_webrtc_signalling::server: new

2023-03-02T20:16:14.883938Z DEBUG ThreadId(01) spawn:new: gst_plugin_webrtc_signalling::handlers: new

2023-03-02T20:16:14.883959Z DEBUG ThreadId(01) spawn:new: gst_plugin_webrtc_signalling::handlers: close time.busy=3.02µs time.idle=19.0µs

2023-03-02T20:16:14.884018Z DEBUG ThreadId(01) spawn: gst_plugin_webrtc_signalling::server: close time.busy=71.0µs time.idle=75.2µs

Pipeline is live and does not need PREROLL ...

Setting pipeline to PLAYING ...

New clock: GstSystemClock

Redistribute latency...

NvMMLiteOpen : Block : BlockType = 4

===== NVMEDIA: NVENC =====

NvMMLiteBlockCreate : Block : BlockType = 4

H264: Profile = 66, Level = 0

NVMEDIA_ENC: bBlitMode is set to TRUE

Redistribute latency...

Redistribute latency...

2023-03-02T20:16:15.359904Z DEBUG ThreadId(01) accept_async: gst_plugin_webrtc_signalling::server: new

2023-03-02T20:16:15.363019Z DEBUG ThreadId(01) accept_async: tungstenite::handshake::server: Server handshake done.

2023-03-02T20:16:15.364066Z INFO ThreadId(01) accept_async: gst_plugin_webrtc_signalling::server: New WebSocket connection this_id=ebcd44c6-9cc9-456c-bbbb-8abf358daf4e

2023-03-02T20:16:15.364271Z DEBUG ThreadId(01) accept_async: gst_plugin_webrtc_signalling::server: close time.busy=3.55ms time.idle=846µs

2023-03-02T20:16:15.406678Z INFO ThreadId(01) gst_plugin_webrtc_signalling::server: Received message Ok(Text(Utf8Bytes(b"{\"type\":\"setPeerStatus\",\"roles\":[\"listener\"],\"meta\":{\"name\":\"WebClient-1744660204937\"}}")))

2023-03-02T20:16:15.407648Z DEBUG ThreadId(01) set_peer_status{peer_id="ebcd44c6-9cc9-456c-bbbb-8abf358daf4e" status=PeerStatus { roles: [Listener], meta: Some(Object {"name": String("WebClient-1744660204937")}), peer_id: None }}: gst_plugin_webrtc_signalling::handlers: new

2023-03-02T20:16:15.408096Z INFO ThreadId(01) set_peer_status{peer_id="ebcd44c6-9cc9-456c-bbbb-8abf358daf4e" status=PeerStatus { roles: [Listener], meta: Some(Object {"name": String("WebClient-1744660204937")}), peer_id: None }}: gst_plugin_webrtc_signalling::handlers: registered as a producer peer_id=ebcd44c6-9cc9-456c-bbbb-8abf358daf4e

2023-03-02T20:16:15.408473Z DEBUG ThreadId(01) set_peer_status{peer_id="ebcd44c6-9cc9-456c-bbbb-8abf358daf4e" status=PeerStatus { roles: [Listener], meta: Some(Object {"name": String("WebClient-1744660204937")}), peer_id: None }}: gst_plugin_webrtc_signalling::handlers: close time.busy=530µs time.idle=294µs

2023-03-02T20:16:15.412198Z INFO ThreadId(01) gst_plugin_webrtc_signalling::server: Received message Ok(Text(Utf8Bytes(b"{\"type\":\"list\"}")))

2023-03-02T20:16:15.412742Z DEBUG ThreadId(01) list_producers{peer_id="ebcd44c6-9cc9-456c-bbbb-8abf358daf4e"}: gst_plugin_webrtc_signalling::handlers: new

2023-03-02T20:16:15.413029Z DEBUG ThreadId(01) list_producers{peer_id="ebcd44c6-9cc9-456c-bbbb-8abf358daf4e"}: gst_plugin_webrtc_signalling::handlers: close time.busy=31.2µs time.idle=287µs

2023-03-02T20:16:45.416057Z INFO ThreadId(01) gst_plugin_webrtc_signalling::server: Received message Ok(Pong(b""))

2023-03-02T20:17:15.420336Z INFO ThreadId(01) gst_plugin_webrtc_signalling::server: Received message Ok(Pong(b""))

2023-03-02T20:17:45.421880Z INFO ThreadId(01) gst_plugin_webrtc_signalling::server: Received message Ok(Pong(b""))

2023-03-02T20:18:15.423618Z INFO ThreadId(01) gst_plugin_webrtc_signalling::server: Received message Ok(Pong(b""))

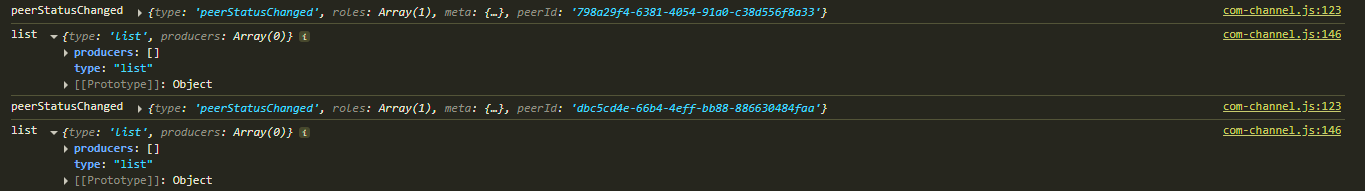

I added some log statements on the client side and I get

It seems like the webrtcsink element isn’t registered as a producer? I’m just not sure why it’s not showing up in remote streams.
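To double-check that suspicion against the server log, here is a rough sketch that pulls the JSON payloads out of the `Received message Ok(Text(...))` lines and lists the roles announced through `setPeerStatus`. It assumes the exact line format shown in my log; the function names `extractMessages` and `announcedRoles` are my own and not part of any GStreamer tooling.

```javascript
// Extract the JSON payload of every text message in a signalling-server log.
// Assumes the line format seen in the log above:
//   ... Received message Ok(Text(Utf8Bytes(b"<escaped JSON>")))
function extractMessages(logText) {
  const messages = [];
  const pattern = /Received message Ok\(Text\(Utf8Bytes\(b"(.*)"\)\)\)/;
  for (const line of logText.split("\n")) {
    const match = pattern.exec(line);
    if (!match) continue; // Pong frames and tracing span lines are skipped
    // The debug output escapes quotes and backslashes; undo that.
    const json = match[1].replace(/\\(["\\])/g, "$1");
    try {
      messages.push(JSON.parse(json));
    } catch {
      // Ignore payloads that are not valid JSON after unescaping.
    }
  }
  return messages;
}

// Collect every role announced through setPeerStatus messages.
function announcedRoles(logText) {
  const roles = new Set();
  for (const msg of extractMessages(logText)) {
    if (msg.type === "setPeerStatus" && Array.isArray(msg.roles)) {
      msg.roles.forEach((role) => roles.add(role));
    }
  }
  return [...roles];
}

// Three lines copied from the log above.
const sample = String.raw`2023-03-02T20:16:15.406678Z INFO ThreadId(01) gst_plugin_webrtc_signalling::server: Received message Ok(Text(Utf8Bytes(b"{\"type\":\"setPeerStatus\",\"roles\":[\"listener\"],\"meta\":{\"name\":\"WebClient-1744660204937\"}}")))
2023-03-02T20:16:15.412198Z INFO ThreadId(01) gst_plugin_webrtc_signalling::server: Received message Ok(Text(Utf8Bytes(b"{\"type\":\"list\"}")))
2023-03-02T20:16:45.416057Z INFO ThreadId(01) gst_plugin_webrtc_signalling::server: Received message Ok(Pong(b""))`;

const roles = announcedRoles(sample);
console.log(roles);
console.log(
  roles.includes("producer")
    ? "a producer announced itself"
    : "no producer announced itself"
);
```

On the lines I pasted, the only `setPeerStatus` message carries the `listener` role and comes from the web client, which matches what I see in the browser.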